I was recently asked by Kate Russell to take a look at a new social media app called Sarahah, and whilst she was talking me through the basics of the app I couldn't help but poke around and notice a few security issues...

The App

You can get a native application on iOS and Android, but I focused my efforts on the web app because it was the easiest to test. At the time of writing it's rated as the number 1 app on the App Store and is number 1 in more than 10 countries on Google Play too. It's fair to say it's pretty popular, and I wouldn't be surprised if it had already had a fair amount of testing.

The Issues

One of the things that struck me right away was how simple the interface was. The app is designed so you can create an account and send messages to other users, anonymously if you wish. That's really all there is to the platform, so its entire functionality can be summarised as send, receive, favourite and delete messages. The first step was to fire up Fiddler and proxy all traffic through it so I could see exactly what the app was doing.

Sequential ID numbers

Wherever I look within the app, it seems to use sequential ID numbers for just about everything. Whilst this isn't an immediate problem, it's generally bad practice to use something as predictable as this. For example, if I send myself a few messages in quick succession I can see the ID number incrementing. With just 3 messages sent one after the other, the ID numbers for the messages were 614680370, 614681834 and 614683106. The gaps between them are presumably other users' messages being created in the meantime, but the IDs only ever go up.
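To show why incrementing IDs matter, here's a minimal Python sketch using the three IDs above. It assumes IDs only ever increase and that the next gap is no larger than the biggest gap observed; the function names are hypothetical. For contrast it also generates a random identifier with Python's `secrets` module, one common alternative to sequential IDs.

```python
import secrets


def id_gaps(observed: list[int]) -> list[int]:
    """Differences between consecutive observed message IDs."""
    return [later - earlier for earlier, later in zip(observed, observed[1:])]


def candidate_window(last_id: int, max_gap: int) -> range:
    """IDs that could plausibly come next, assuming IDs only increase
    and the next gap is no larger than max_gap (an assumption)."""
    return range(last_id + 1, last_id + max_gap + 1)


def unguessable_id() -> str:
    """A random, non-sequential identifier (16 random bytes, URL-safe)."""
    return secrets.token_urlsafe(16)


observed = [614680370, 614681834, 614683106]  # the three test message IDs
gaps = id_gaps(observed)
window = candidate_window(observed[-1], max(gaps))
print(gaps)         # [1464, 1272]
print(len(window))  # 1464
print(unguessable_id())
```

A window of around 1,500 candidates is tiny next to the 2^128 possible values of a 16-byte random token, which is why unpredictable IDs are usually preferred for anything that appears in URLs or requests.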

Bypassing CSRF protection

The app does deploy native CSRF protection, but it turns out that in almost all circumstances it's fairly trivial to bypass. Endpoints like /Messages/FavoriteAjax and /Messages/DeleteAjax can be called with GET requests instead of POST requests and, in most cases, only require the id parameter. This makes it easy to craft a link to send to someone, or to launch a CSRF attack from any page you can get the victim to visit. Couple this issue with the sequential ID numbers above and it becomes fairly easy to send Kate a message, predict the message ID and then craft a CSRF attack that makes her favourite that message so it's nice and prominent in her account.
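A minimal Python sketch of the server-side checks that would close this hole, assuming a per-session HMAC token (one variant of the synchronizer-token pattern): state-changing endpoints refuse anything but POST and require a token bound to the session. Only the two endpoint paths come from the app; the function names, session ID and key handling are hypothetical.

```python
import hashlib
import hmac
import secrets
from typing import Optional

# Endpoint paths observed in the app; everything else here is hypothetical.
STATE_CHANGING_PATHS = {"/Messages/FavoriteAjax", "/Messages/DeleteAjax"}


def make_csrf_token(session_id: str, key: bytes) -> str:
    """Derive a per-session anti-CSRF token with HMAC-SHA256."""
    return hmac.new(key, session_id.encode(), hashlib.sha256).hexdigest()


def allow_request(method: str, path: str, session_id: str,
                  submitted_token: Optional[str], key: bytes) -> bool:
    if path not in STATE_CHANGING_PATHS:
        return True  # read-only endpoints are not checked in this sketch
    if method.upper() != "POST":
        return False  # refuse GET (or anything else) for state changes
    expected = make_csrf_token(session_id, key)
    # Constant-time comparison on bytes; a missing token compares as empty.
    return hmac.compare_digest(expected.encode(), (submitted_token or "").encode())


key = secrets.token_bytes(32)
token = make_csrf_token("session-123", key)
print(allow_request("GET", "/Messages/DeleteAjax", "session-123", token, key))   # False
print(allow_request("POST", "/Messages/DeleteAjax", "session-123", token, key))  # True
```

Because a cross-site page can't read the victim's token, a forged link or auto-submitting form fails on one of the two checks.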

XSS

It seems I'm not the only researcher taking a look at Sarahah, as another researcher found a stored XSS vulnerability in the site too. It was simply a case of sending the script payload as a message and ensuring the recipient had to scroll down so that the new message was loaded into the page via AJAX. This was easily achieved by sending multiple messages after your XSS payload, but I actually found this by accident after sending various test messages to Kate, and only came across the other researcher's blog post later when searching Google.
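The usual defence is to encode user content when it's written into the page, rather than filtering words out of messages. A minimal Python sketch using the standard library's `html.escape`; `render_message` and its markup are hypothetical, and the same encoding would have to apply to messages loaded via AJAX (for example, inserting them on the client with `textContent` rather than `innerHTML`).

```python
import html


def render_message(body: str) -> str:
    """Build the HTML for one message, escaping the user-supplied text."""
    return f'<div class="message">{html.escape(body)}</div>'


print(render_message("<script>alert(1)</script>"))
print(render_message("Can I read your movie script tonight?"))
```

The payload comes out as inert text (`&lt;script&gt;...`), while ordinary sentences containing the word "script" pass through untouched.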

Filtering

The app does seem to have some rudimentary filtering in place to prevent abuse of other members. We've seen similar problems with abuse in the past on sites like ask.fm, where being able to send anonymous messages brings out the worst in people. For that reason I imagine they've taken some basic steps to try and stop it, but the filtering really isn't great.

As far as I can tell, it matches messages against a short list of keywords and silently drops any that match. You get no indication that the message was dropped and everything appears to have worked just fine, so if you send a friend the message "I would kill for a cheeseburger right now" you'll be told it was sent when it was actually discarded. It's also trivial to bypass the filter, because "I would .kill for a cheeseburger right now" is sent just fine. What's even worse is that they appear to have fixed the XSS issue above by adding "script" to the filter list, because "Can I read your movie script tonight?" is now filtered too...
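The behaviour described above can be reproduced in a few lines of Python. This is a guess at the logic, not Sarahah's code: the observed results (".kill" getting through) fit whole-word matching better than a true substring search, which would still catch ".kill", so both variants are shown. Beyond the two words seen being filtered, the keyword set is hypothetical.

```python
# Hypothetical keyword list: "kill" and "script" are the two words
# observed being filtered; the real list is unknown.
BLOCKED_WORDS = {"kill", "script"}


def is_dropped(message: str) -> bool:
    """Whole-word match: split on whitespace, compare lower-cased tokens."""
    return any(token.lower() in BLOCKED_WORDS for token in message.split())


def is_dropped_substring(message: str) -> bool:
    """True substring match, for comparison."""
    lowered = message.lower()
    return any(word in lowered for word in BLOCKED_WORDS)


for text in ["I would kill for a cheeseburger right now",
             "I would .kill for a cheeseburger right now",
             "Can I read your movie script tonight?"]:
    print(is_dropped(text), is_dropped_substring(text), text)
```

Either way, a keyword list produces both false positives and trivial bypasses, and silently discarding the message hides both from the sender.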

No rate limiting

The application doesn't seem to deploy rate limiting anywhere on the site, and this is especially problematic when sending messages. Given the nature of the site and the ability to send totally anonymous messages, I was able to send several hundred abusive messages to Kate in just a couple of seconds. With no way to bulk delete messages, Kate would have to sit there and delete each of them one by one.
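A token bucket is one common way to add rate limiting. Here's a minimal Python sketch; the limits (a burst of 5 messages, then one every 5 seconds) and keying buckets by sender IP are illustrative assumptions, and a real deployment would keep the buckets in shared storage rather than a process-local dict.

```python
import time
from typing import Dict, Optional


class TokenBucket:
    """Allow bursts of up to `capacity` actions, refilled at `rate` per second."""

    def __init__(self, capacity: float, rate: float, now: Optional[float] = None):
        self.capacity = capacity
        self.rate = rate
        self.tokens = capacity
        self.updated = time.monotonic() if now is None else now

    def allow(self, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        # Refill in proportion to the time since the last check, up to capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


# One bucket per sender key, e.g. client IP for anonymous senders (hypothetical).
buckets: Dict[str, TokenBucket] = {}


def may_send(sender_key: str, now: Optional[float] = None) -> bool:
    if sender_key not in buckets:
        buckets[sender_key] = TokenBucket(capacity=5, rate=0.2, now=now)
    return buckets[sender_key].allow(now)
```

With these settings, several hundred messages in a couple of seconds would be cut off after the fifth.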

Password reset

When you trigger a password reset for an account, which only requires the email address, the password is immediately changed and the new one is emailed to the user. This isn't an ideal way to handle password resets, because anyone can trigger one, so I can make a nuisance of myself by resetting the password for accounts I don't own. Instead, a password reset link should be emailed to the user, and the password should only change if they were the one who requested the reset and choose to use the link. By running a script in a loop every 30 seconds I was able to constantly reset the password on Kate's account, which would very quickly become a major annoyance.
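A minimal Python sketch of the link-based approach, assuming an in-memory dict in place of a database and a one-hour expiry (both illustrative). Only a hash of the token is stored, and the existing password is left alone until the link is used, so someone triggering resets for another person's account achieves nothing beyond an unwanted email.

```python
import hashlib
import secrets
import time
from typing import Dict, Optional, Tuple

RESET_TTL_SECONDS = 3600  # assumption: links expire after an hour

# token hash -> (email, expiry timestamp); stands in for a database table
pending_resets: Dict[str, Tuple[str, float]] = {}


def _hash(token: str) -> str:
    return hashlib.sha256(token.encode()).hexdigest()


def request_reset(email: str, now: Optional[float] = None) -> str:
    """Create a single-use reset token. The current password is NOT changed."""
    now = time.time() if now is None else now
    token = secrets.token_urlsafe(32)
    pending_resets[_hash(token)] = (email, now + RESET_TTL_SECONDS)
    return token  # would be emailed to the user inside a reset link


def redeem_reset(token: str, now: Optional[float] = None) -> Optional[str]:
    """Return the account email if the token is valid; each token works once."""
    now = time.time() if now is None else now
    entry = pending_resets.pop(_hash(token), None)
    if entry is None:
        return None
    email, expires_at = entry
    return email if now <= expires_at else None
```

Only after `redeem_reset` succeeds would the user be shown a form to choose a new password.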

Account lock out

Another problem stems from the limit on how many times you can attempt to log in to an account. You can attempt to log in a maximum of 10 times before the account is locked for a period of time (oddly, the lockout duration seems to vary unpredictably). This is another potential problem, because once I know your email address I can make 10 failed login attempts and lock you out of your own account. With a simple update to the password reset script above, I can change your password and then lock you out of your account too.

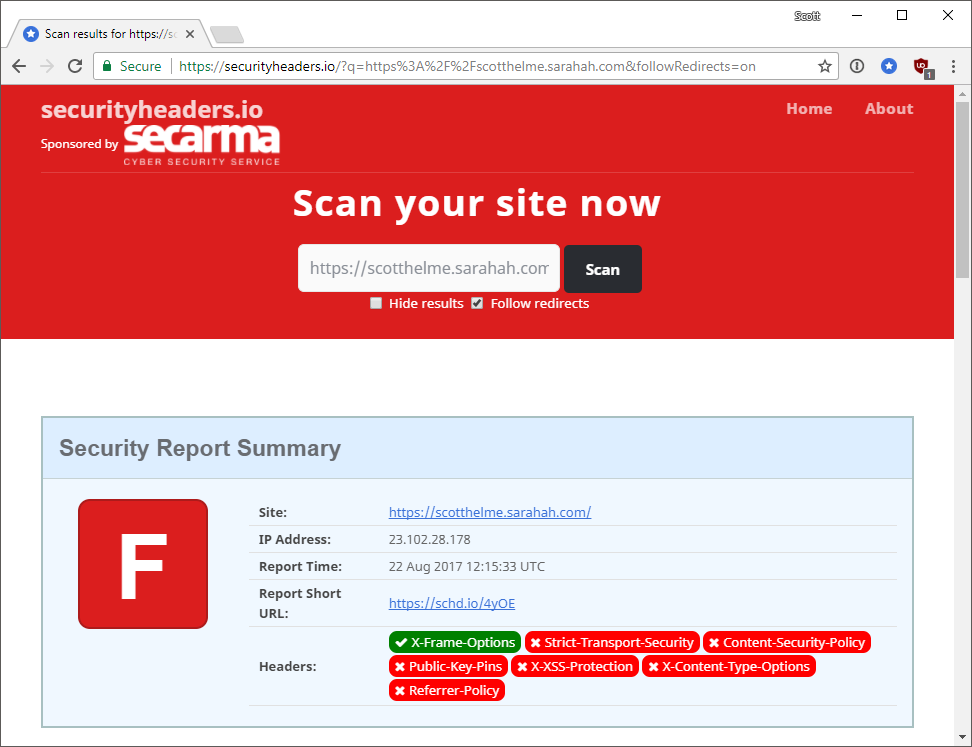
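One alternative to a hard lockout is to throttle failed attempts per account and source IP, so an attacker's failures slow the attacker down without locking the real owner out. A minimal Python sketch; the thresholds and the in-memory dict are assumptions, and on its own this doesn't stop an attacker spreading attempts across many IP addresses, which is why it's often combined with a per-account limit or a CAPTCHA.

```python
from typing import Dict, Tuple

FREE_ATTEMPTS = 5  # assumption: failures allowed before any delay
MAX_DELAY = 300    # assumption: cap the delay at five minutes

# (email, client IP) -> consecutive failed attempts
failures: Dict[Tuple[str, str], int] = {}


def record_failure(email: str, ip: str) -> None:
    key = (email.lower(), ip)
    failures[key] = failures.get(key, 0) + 1


def record_success(email: str, ip: str) -> None:
    failures.pop((email.lower(), ip), None)


def required_delay(email: str, ip: str) -> int:
    """Seconds this client must wait before trying this account again."""
    count = failures.get((email.lower(), ip), 0)
    if count < FREE_ATTEMPTS:
        return 0
    # Exponential back-off: 1, 2, 4, ... seconds, capped at MAX_DELAY.
    return min(2 ** (count - FREE_ATTEMPTS), MAX_DELAY)
```

An attacker hammering Kate's account from one address ends up waiting minutes between guesses, while Kate logging in from her own connection sees no delay at all.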

Security headers

You'd hope that a brand new social media platform would have a fairly robust deployment of various security features, including security headers, but sadly they don't.

The site can't deploy features like HSTS (HTTP Strict Transport Security) because it switches between serving pages over HTTP and HTTPS as you browse.

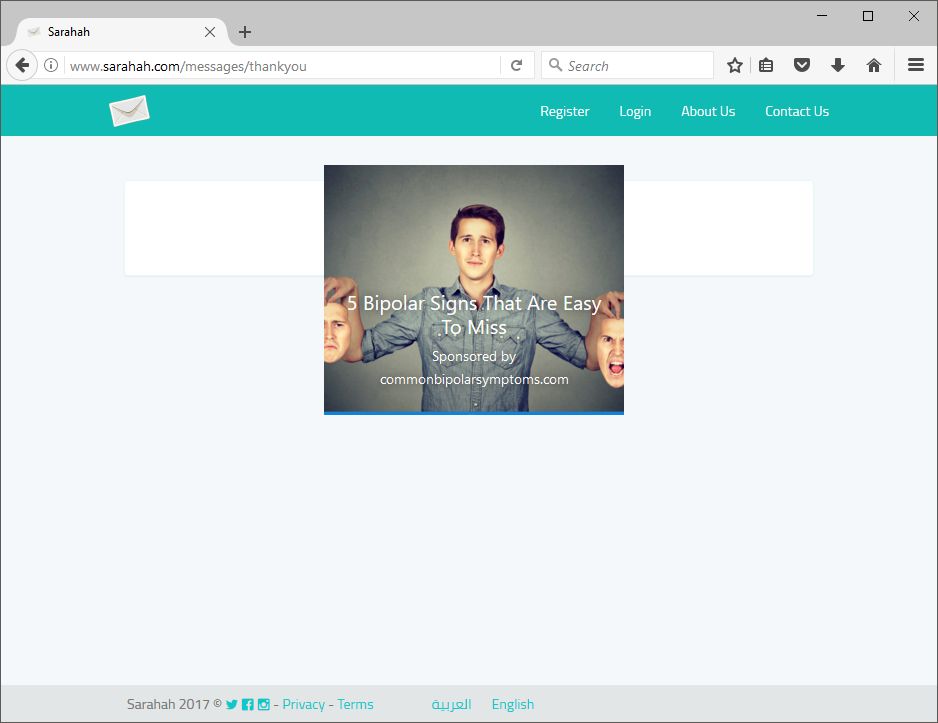

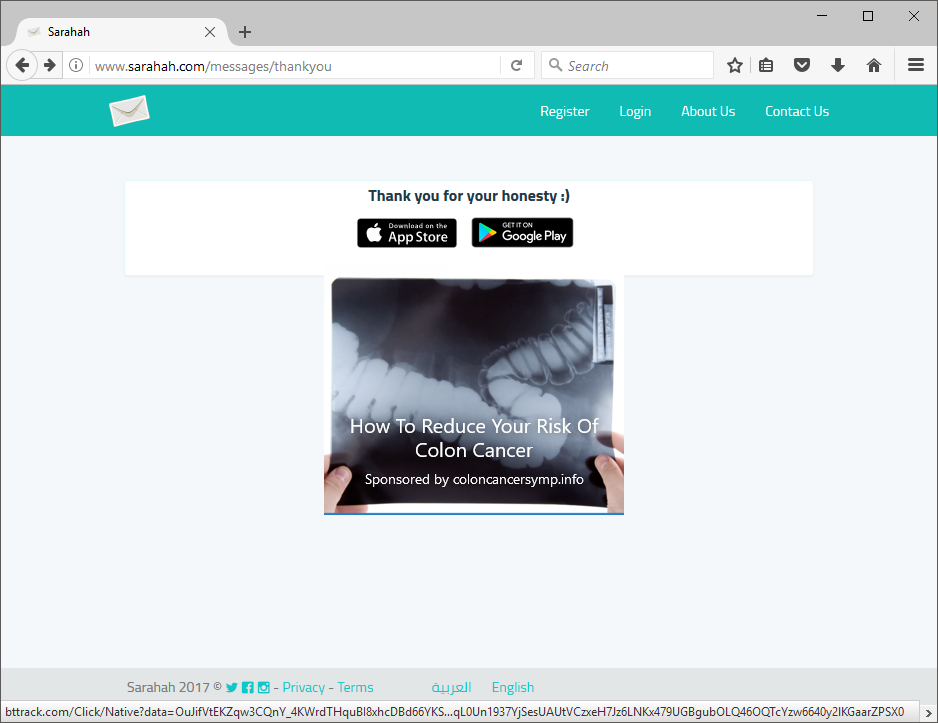
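For a quick self-check of a response's headers, here's a minimal Python sketch. The header list is an illustrative baseline rather than anything Sarahah-specific, and the function takes a plain mapping of header names to values, so it can be fed from whatever HTTP client you use.

```python
from typing import List, Mapping

# A commonly recommended baseline; which headers a site needs is a judgment call.
RECOMMENDED_HEADERS = (
    "Strict-Transport-Security",
    "Content-Security-Policy",
    "X-Content-Type-Options",
    "X-Frame-Options",
    "Referrer-Policy",
)

# Example HSTS value; only safe once every page is served over HTTPS.
EXAMPLE_HSTS = "max-age=31536000; includeSubDomains"


def missing_security_headers(headers: Mapping[str, str]) -> List[str]:
    """Return recommended headers absent from a response (names are case-insensitive)."""
    present = {name.lower() for name in headers}
    return [name for name in RECOMMENDED_HEADERS if name.lower() not in present]


print(missing_security_headers({"Content-Type": "text/html", "x-frame-options": "DENY"}))
```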

Switching to HTTP

Every time you send a message on Sarahah, you're redirected to an HTTP page that contains an advert. I assume this is how they're generating revenue from the site.

If you hover over the advert, it moves to reveal a heart-warming message.

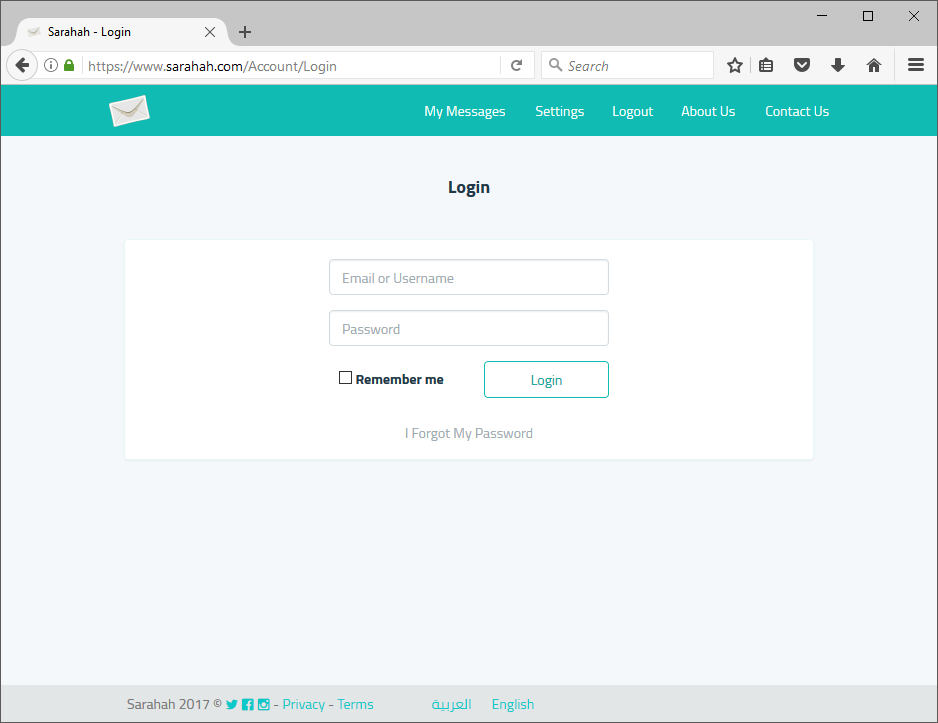

Terrible approach aside (I'm not entirely sure why they need to redirect to HTTP to display an advert), there's a practical problem: the session cookie is marked as Secure, so the browser doesn't include it on the insecure request. This means that on the thank-you page you're not actually logged in. If you click Login, you're taken to the login page, which is served over HTTPS, so the cookie is sent and you are in fact authenticated, but the site doesn't handle that properly and still presents the login page to you.

The whole process feels pretty clunky.

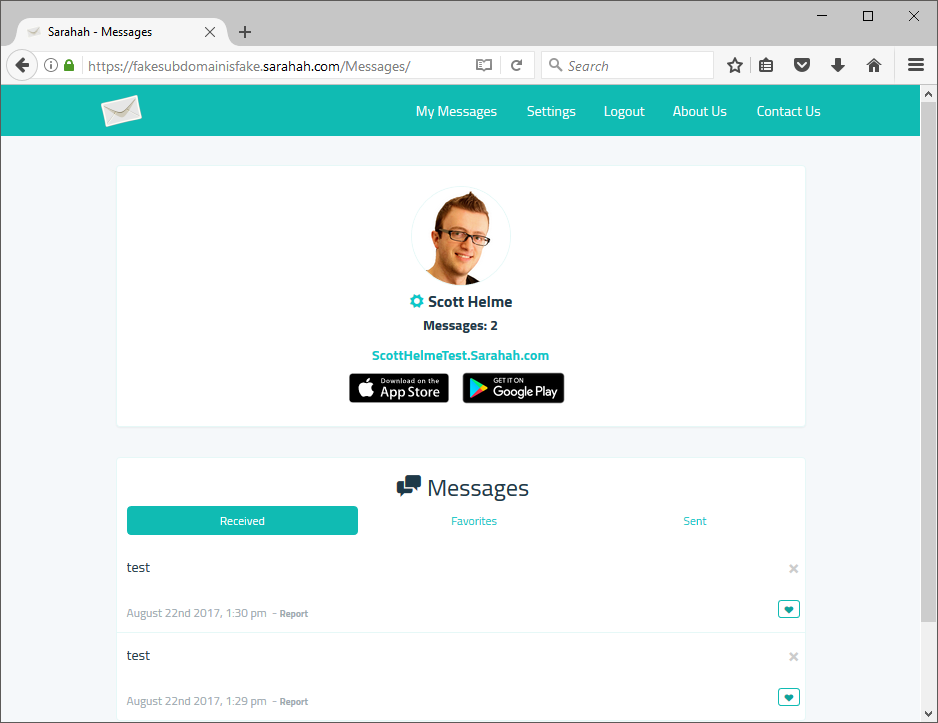
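To make the cookie behaviour concrete, here's a minimal Python sketch. The first function builds a `Set-Cookie` header with the standard library's `http.cookies`; the second is a deliberately simplified model of the browser's rule for `Secure` cookies that ignores domain, path and other matching rules. The cookie name and URLs are hypothetical.

```python
from http.cookies import SimpleCookie
from urllib.parse import urlsplit


def session_cookie_header(session_id: str) -> str:
    """Build a Set-Cookie header for a session cookie marked Secure and HttpOnly."""
    cookie = SimpleCookie()
    cookie["session"] = session_id  # cookie name is hypothetical
    cookie["session"]["secure"] = True
    cookie["session"]["httponly"] = True
    cookie["session"]["path"] = "/"
    return cookie.output()


def browser_sends_cookie(url: str, secure: bool) -> bool:
    """Simplified model of the browser rule: Secure cookies only go over https."""
    return urlsplit(url).scheme == "https" or not secure


print(session_cookie_header("abc123"))
print(browser_sends_cookie("http://example.com/thanks", secure=True))   # False
print(browser_sends_cookie("https://example.com/login", secure=True))  # True
```

Because the thank-you page is requested over `http://`, the session never reaches the server, which matches the logged-out page described above. Serving every page over HTTPS removes the mismatch and would also make HSTS an option.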

Use any subdomain you like

This one doesn't present a problem, at least not one that I've found, but you can use any subdomain you like when navigating your account section.

I'm not exactly sure why you'd want to do this, but I'd have thought the site should redirect you to your own subdomain when navigating like this. Again, I haven't found any problem linked to it, but I also can't see a reason for it other than that it's simply been left that way.
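If the site did want to enforce this, the check is small. A minimal Python sketch, assuming each account lives on its own subdomain of sarahah.com and that `own_subdomain` comes from the logged-in session; both the helper and the domain layout are assumptions.

```python
from typing import Optional
from urllib.parse import urlsplit, urlunsplit

BASE_DOMAIN = "sarahah.com"  # assumption: accounts live at <name>.sarahah.com


def canonical_redirect(url: str, own_subdomain: str) -> Optional[str]:
    """Return the URL on the user's own subdomain, or None if already there."""
    parts = urlsplit(url)
    expected_host = f"{own_subdomain.lower()}.{BASE_DOMAIN}"
    if parts.hostname == expected_host:
        return None
    # Keep scheme, path, query and fragment; swap only the host (drops any port).
    return urlunsplit(parts._replace(netloc=expected_host))
```

A `None` result means no redirect is needed; otherwise the server would answer with a redirect to the returned URL.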

Disclosure timeline

Once I'd found and confirmed the issues, I began reaching out to Sarahah to responsibly disclose them so they could begin work on fixing the problems. The response wasn't great, and it took a while to actually find out who to send the report to. Once I did send it, their replies were short and always required chasing. I got the impression they weren't taking the emails very seriously.

Aug 8th: Email sent to [email protected]

Aug 8th: Twitter DM to @Saraha_com, automated response.

Aug 9th: Follow up email sent to [email protected]

Aug 9th: Follow up to Twitter DM, automated response.

Aug 10th: Facebook message sent to @sarahahapp.

Aug 11th: Follow up to Facebook message.

Aug 18th: Public tweet asking for help to disclose.

Aug 19th: Received a Twitter DM providing *snip*@sarahah.com for disclosure.

Aug 21st: Email sent to *snip*@sarahah.com with full details of all issues, cc to Kate.

Aug 30th: Kate chased email, no response.

Sep 6th: Follow up to Kate's email, still no response.

Sep 6th: Response from Zain, "Thanks a lot Scott. We are doing our best to remedy these issues.".

Sep 6th: Responded asking for more details, current progress, ETA for completion.

Sep 29th: Chased previous email, no response.

Sep 29th: Response from Zain, "We have passed this to our developer and most of the issues have been fixed. Thank you".

Sep 29th: Responded to ask for details of which issues, were others being fixed, ETA for completion.

Oct 23rd: No response, blog published.

As we've seen in the past, anonymous messaging services and platforms are very commonly used for abuse and bullying. It's not just Kate and I who have these concerns either; they've already been discussed widely in the press. Given the nature of the service and the young demographic of its users, I would have hoped the company would be responsive and engaged in the disclosure process, but I feel it was almost the opposite.