When building https://report-uri.io my biggest concern was how I was going to store and query the potentially huge amounts of data that the application could have to deal with. This blog covers the how and why of my decision to use Azure Table Storage.

Introduction

When I first had the idea to build https://report-uri.io, the biggest thing that jumped out at me was that there could be potentially huge amounts of inbound data that would need to be logged, stored and queried in an efficient manner. From some quick research it seemed obvious that, most of the time, sites shouldn't really be generating CSP or HPKP violation reports, or so I thought. Once you have set up and refined your policy, you'd expect not to be getting any reports at all unless there was a problem, but this turned out not to be the case. Even excluding things like malvertising, ad-injectors and advertisers serving up http adverts on https pages, which I see a steady stream of constantly, there were things like policy misconfiguration and genuine XSS attacks that could also cause reports to be generated and sent, potentially in huge numbers. Every browser that visits a page with a violation will send a report and there can be, and regularly are, multiple violations on a single page. Multiply that by a few heavily trafficked sites and you could very quickly have hundreds if not thousands of reports flooding in every single minute.

SQL Database

My first thought, as is fairly typical when one thinks 'I need a database', was towards the time-tested SQL Server (or MySQL depending on your preference). Having had plenty of interactions with SQL Server in the past, I knew that it was more than capable of handling the simple requirements of a site like this. That said, I was also aware that running a high performance and highly available database can be quite demanding. I knew I was going to want someone else to take care of this for me so I started looking around at different cloud providers. It became apparent pretty quickly that SQL Server in the cloud was fairly pricey for the budget I had in mind for the site!

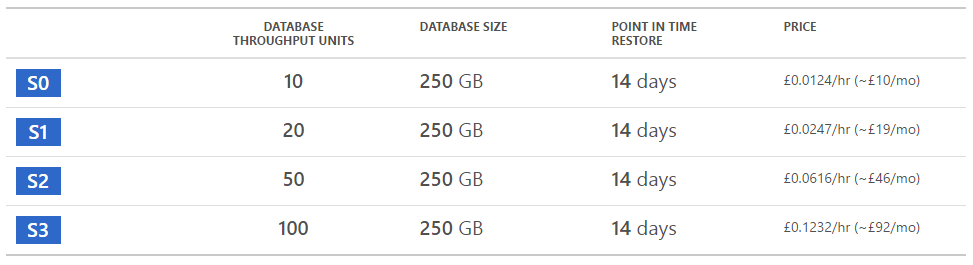

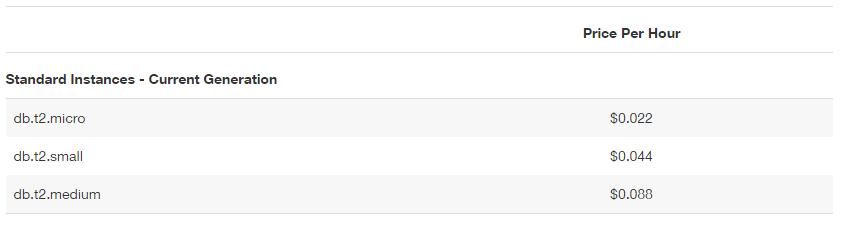

SQL Azure was coming in at between £46 and £92 a month for a database capable of handling just a few thousand transactions a minute. Relatively cheap to some I have no doubt, but considering that all I'd looked at so far was the cost of the database, it wasn't a great start. Amazon also have their own offering of various flavours of RDBMS hosting but again, for a reasonable level of throughput and performance, I was looking at starting prices in the £40 - £50 a month region just to meet some basic needs.

My largest concern with having a fixed throughput was how easily an attacker could saturate it given the nature of the site. If the database is only provisioned for 5,000 transactions per minute, then the inbound reports, the queries against the data and my session store (more on that in another blog) could quite easily use all of that up, and if the database becomes unavailable, the whole site stops working. I needed something without the throughput restrictions that was also a lot cheaper.

NoSQL Database

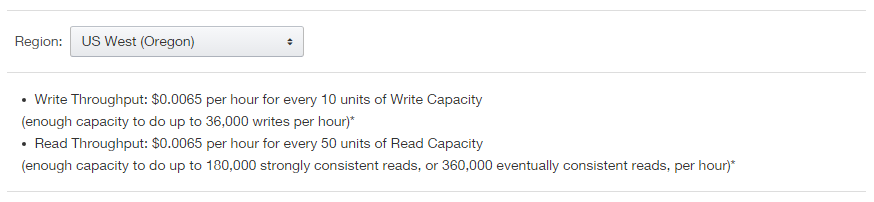

Having used MongoDB for one of my previous projects, the next logical step was to look and see what was available in terms of NoSQL databases. Again, the hosted solutions seemed to be fairly pricey and were constrained by the typical CPU/RAM tiers or just a given performance metric. With great database-as-a-service offerings from both Amazon and Microsoft in the form of DynamoDB and Table Storage respectively, I fired up a small test on both to try them out. One of the first things that cropped up with DynamoDB was the provisioned throughput again. You aren't actually billed for the transactions you make, you're billed to have a maximum available throughput after which transactions will start to fail. If you don't use them, you're still paying for them, but as soon as you go over the limit, you're in trouble. This means that you'd need to provision a good portion above your average requirements to be able to handle bursts in traffic.

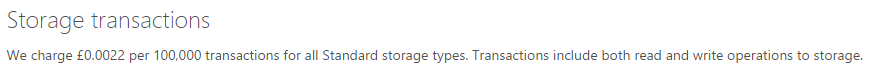

Still, it's a little cheaper at ~£30 a month for the same level of throughput as the SQL Server database mentioned above, but we still have that maximum throughput limit. Microsoft do things a little differently with Table Storage in Azure: you're only billed for the transactions you actually use and there is no concept of provisioning for throughput. Each storage account can use as much or as little of the scalability limits as is required, and you never pay any more or less, just the per transaction cost.

Microsoft Azure Table Storage

Having been fairly impressed with my initial testing of Table Storage, I decided to throw some numbers on a piece of paper and see what the costs were going to come out at. Each storage account has a performance target of 20,000 transactions per second. Yes, 20,000 per second! That means that my application can perform up to this limit with one restriction. There is a 2,000 transaction per second target on a Partition, which is similar to the concept of a table in a traditional relational database. This shouldn't be a problem as long as the data is partitioned properly, a note for later on. Beyond this though, there aren't any other limitations. If you make 1 transaction in a second you pay the cost of 1 transaction, if you make 1,000 transactions in a second you pay the cost of 1,000 transactions. There are no penalties or additional costs as your throughput increases. The really staggering part is that the cost of a single transaction is £0.000000022, or, to make that a bit easier to get your head around, £0.022 per 1,000,000 transactions. Not only is the incredibly low cost really attractive here, the requirements of my application don't really fit very well with being fixed into a set throughput limit, and Table Storage does away with that.
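To make the difference between the two billing models a little more concrete, here is a rough sketch in Python. It is only an illustration under some loud assumptions: the per-minute load figures are made up, the 5,000 per minute cap is the example figure from earlier in this post, the cap is treated as a hard limit with no burst allowance (real services may behave more gracefully), and the function names are my own rather than anything from a provider's SDK.

```python
# Illustrative only: a simplified comparison of a provisioned throughput cap
# against per-transaction billing. All load figures below are invented.

def rejected_transactions(per_minute_load, provisioned_cap):
    """Count transactions that exceed a hard per-minute cap (assumed model)."""
    return sum(max(0, load - provisioned_cap) for load in per_minute_load)


def pay_per_use_cost(per_minute_load, price_per_million):
    """Cost when every transaction is simply billed at a flat rate."""
    return sum(per_minute_load) / 1_000_000 * price_per_million


if __name__ == "__main__":
    # Four minutes of traffic with one burst, e.g. a misconfigured policy.
    load = [1_000, 1_200, 20_000, 900]

    # With a 5,000 per minute cap, everything above the cap in the burst fails.
    print(rejected_transactions(load, provisioned_cap=5_000))  # 15000

    # With per-transaction billing at £0.022 per million, nothing is rejected
    # and the burst just costs a tiny bit more.
    print(pay_per_use_cost(load, price_per_million=0.022))
```

The point of the sketch is that a burst costs you availability under a fixed cap, whereas under per-transaction billing it only costs you the price of the extra transactions.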

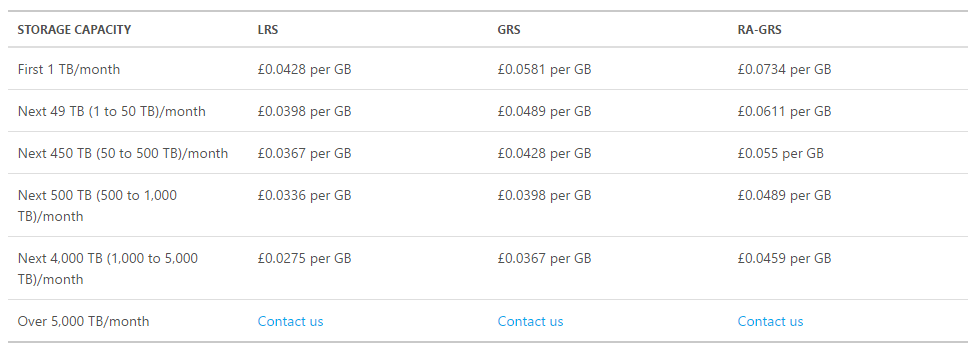

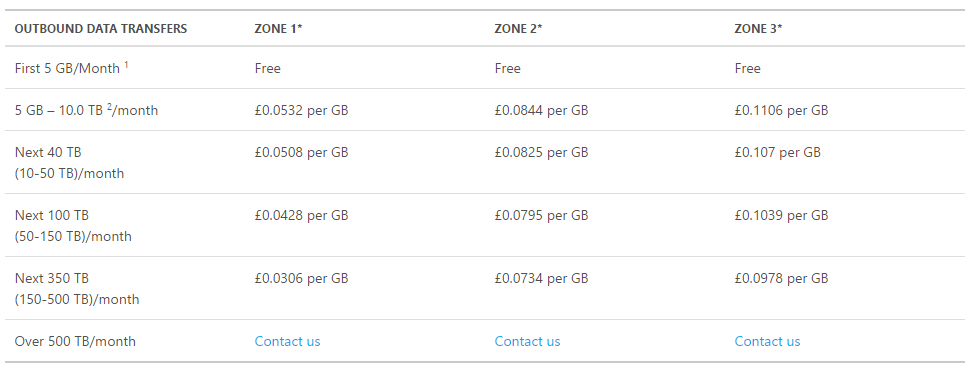

Beyond this, the only additional costs, as with all other providers, are storage space for the database and outbound bandwidth, both of which are again billed based on exactly what you use without any limits or requirements to provision allowances. Data storage is billed at £0.0581/GB/month and the first 5GB of outbound bandwidth is free with a cost of £0.0532/GB after that.

To sum all of this up with a really simple example, I drew up the following.

To store 5GB of data, with 5GB of egress and to issue 10 million transactions against that data would cost: £0.5105 per month. That's less money than I lose down the side of the couch each month!

Even if we get really silly with these numbers and put 100GB in the database with 100GB of egress and issue 200 million transactions against the data, we're still only talking £15.264 per month! That equates to an average of about 4,629 transactions per minute, at a fraction of the price of any quote from other providers, and it proved attractive enough to tip the balance in favour of Azure Table Storage.
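If you want to check the sums above or plug in your own numbers, the arithmetic can be sketched in a few lines of Python. The prices are the ones quoted in this post and should be treated as a snapshot rather than current pricing. The function names and the 30 day month used for the per-minute average are my own assumptions.

```python
# Prices as quoted in this post (GBP). Treat them as a snapshot, not a rate card.
STORAGE_PER_GB = 0.0581            # per GB per month
EGRESS_PER_GB = 0.0532             # per GB beyond the free allowance
FREE_EGRESS_GB = 5                 # first 5GB of outbound bandwidth is free
PER_MILLION_TRANSACTIONS = 0.022   # per 1,000,000 transactions


def monthly_cost(storage_gb, egress_gb, transactions):
    """Estimated monthly bill: storage + billable egress + transactions."""
    storage = storage_gb * STORAGE_PER_GB
    egress = max(0, egress_gb - FREE_EGRESS_GB) * EGRESS_PER_GB
    tx = transactions / 1_000_000 * PER_MILLION_TRANSACTIONS
    return storage + egress + tx


def average_per_minute(transactions, days=30):
    """Average transactions per minute, assuming a 30 day month."""
    return transactions / (days * 24 * 60)


if __name__ == "__main__":
    print(f"{monthly_cost(5, 5, 10_000_000):.4f}")       # 0.5105
    print(f"{monthly_cost(100, 100, 200_000_000):.3f}")  # 15.264
    print(int(average_per_minute(200_000_000)))          # 4629
```

In the larger example the bill breaks down as £5.81 for storage, £5.054 for the 95GB of billable egress and £4.40 for the transactions.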

What's the catch?

Well, there isn't really a catch, as such, but Table Storage does have a very limited feature set when compared to something like SQL Server. That's not to say it's a bad thing, but it can be difficult not having some of the things that you're typically used to. You can read up much more on the difference between the two in Azure Table Storage and Windows Azure SQL Database - Compared and Contrasted. There are no foreign keys for example, joins and stored procedures don't exist either, but the biggest thing for me to get my head around was the lack of a row count feature. In Table Storage if you want to know your row count, you have to keep track of it yourself. If you don't, the only way to obtain it is to query out your entire dataset and count the records in it. That's an incredibly slow, inefficient and arduous task! In coming blogs I'm going to be covering a lot of the problems that I hit whilst trying to adapt to using Table Storage and how I adapted my implementation of the service to get the best possible performance and scale out of it. That includes keeping track of the count of incoming reports, querying against potentially huge datasets efficiently, offloading my PHP session storage to Azure so that I could have truly ephemeral application servers behind my load balancers and much, much more.
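To illustrate the row count problem, here is a minimal sketch in Python. It deliberately does not use the Azure SDK: the `CountedTable` class is a hypothetical in-memory stand-in for a table, and its method names are my own. It only shows the general idea of maintaining a running count on every insert instead of scanning the whole dataset, and it is not a description of how report-uri.io actually does it. A real implementation would also need to store the counter somewhere durable and cope with concurrent writers.

```python
class CountedTable:
    """Hypothetical in-memory stand-in for a table with no built-in row count."""

    def __init__(self):
        self._rows = {}    # (partition_key, row_key) -> entity
        self._counts = {}  # partition_key -> running row count

    def insert(self, partition_key, row_key, entity):
        """Store an entity and bump the running count for new rows only."""
        key = (partition_key, row_key)
        if key not in self._rows:
            self._counts[partition_key] = self._counts.get(partition_key, 0) + 1
        self._rows[key] = entity

    def count(self, partition_key):
        """Cheap: read the counter that was maintained at insert time."""
        return self._counts.get(partition_key, 0)

    def count_by_scanning(self, partition_key):
        """Expensive: walk every stored row and count the matches."""
        return sum(1 for (pk, _rk) in self._rows if pk == partition_key)


if __name__ == "__main__":
    table = CountedTable()
    for i in range(1_000):
        table.insert("example.com", f"report-{i}", {"type": "csp"})

    print(table.count("example.com"))              # 1000
    print(table.count_by_scanning("example.com"))  # 1000
```

Both calls return the same number, but the first is a single lookup while the second touches every row, which is the cost you pay on a large dataset if you haven't kept track of the count yourself.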