Whilst building https://report-uri.io I knew that I was going to need some form of load balancing to accommodate the kind of load I wanted to handle. Having decided against a PaaS like Azure App Service, and instead to leverage the lower costs of IaaS on DigitalOcean, I would be responsible for session handling and storage myself. This is where Azure Table Storage stepped in again and became my centralised session store.

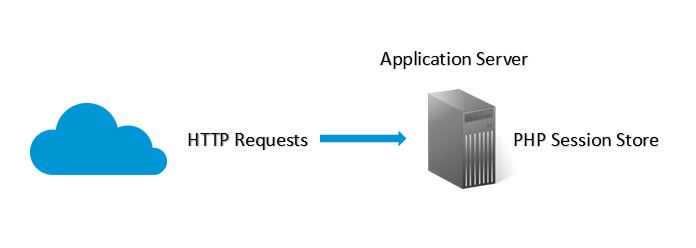

How PHP sessions normally work

When you visit a website that uses PHP sessions, a session is typically created for you and stored on the local disk of the server. Each time you access a page, your browser sends the cookie that contains your session ID, so the server knows which session is yours, and thus who you are.
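The following minimal sketch makes the local-disk dependency concrete by showing where PHP's default `files` save handler keeps a session. The session lives in a file named `sess_` plus the session ID inside `session.save_path`. When that setting is empty, the handler falls back to the system temp directory. The helper `localSessionFile()` and the placeholder ID `abc123` are illustrative only. The sketch also ignores the optional `N;` directory-depth prefix that `session.save_path` can carry.

```php
<?php
declare(strict_types=1);

// Hypothetical helper: where the default "files" handler would keep a session.
// The file lives on this server's local disk, so no other server can see it.
function localSessionFile(string $savePath, string $sessionId): string
{
    // An empty session.save_path falls back to the system temp directory.
    $dir = $savePath !== '' ? $savePath : sys_get_temp_dir();
    return rtrim($dir, '/\\') . DIRECTORY_SEPARATOR . 'sess_' . $sessionId;
}

// The browser sends the ID back in the cookie named by session.name
// ("PHPSESSID" unless configured otherwise).
$cookieName = ini_get('session.name') ?: 'PHPSESSID';
$sessionId = $_COOKIE[$cookieName] ?? 'abc123'; // placeholder ID when run from the CLI

echo localSessionFile((string) ini_get('session.save_path'), $sessionId), PHP_EOL;
```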

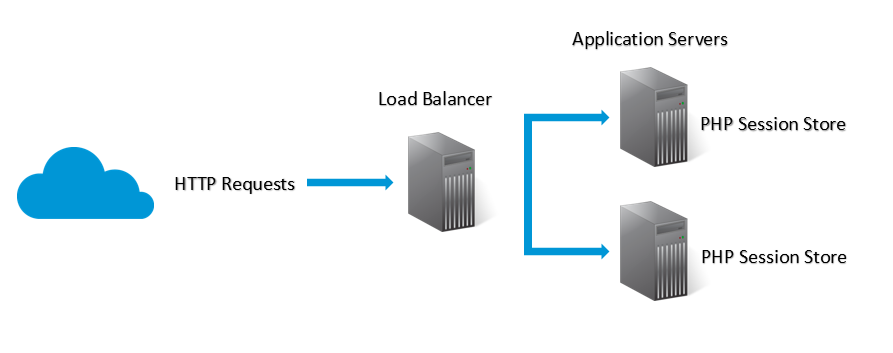

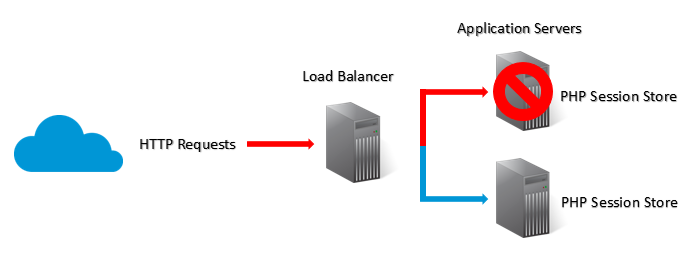

Having the sessions stored on the same server as the application isn't a problem in a typical single-server environment, but things get trickier as you introduce more servers into the mix. If your session is stored on one server and a subsequent request is handled by a different server, that server will have no knowledge of your session or who you are. If you were logged in or had any data in your session, as far as that server is concerned it has been lost.

Scaling up

When websites start to scale and more application servers are introduced, the problem of where to store your sessions becomes apparent. If you want to keep storing sessions locally on each server, the load balancer needs to take this into account and ensure that all of your subsequent requests are passed to the same application server. This is known as sticky sessions and is a fairly common feature of load balancers. It's helpful because it requires no additional configuration and no centralisation of your sessions, but it does come with some drawbacks.

Once your session has been created on an application server, the load balancer needs to pass all of your subsequent requests to that same server. This will be fine in a lot of scenarios, but a few problems can crop up. The whole point of load balancing is to distribute load. If the application server you are pinned to suddenly comes under heavy load, the load balancer can't pass your requests anywhere else. You have to keep using a server that may be struggling along with slow response times.

Not only that, but if the application server that your session is stored on goes offline, your session goes with it. Both of these situations can lead to a very bad user experience, so we need to remove the dependency on individual application servers.
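A rough sketch of sticky routing helps show both drawbacks. The function `stickyBackend()` and the backend names are hypothetical. Hashing the session ID with `crc32()` is also a simplification, because real load balancers more commonly pin clients with an affinity cookie. The sketch makes two points:

- The mapping ignores load entirely.
- Removing the pinned server sends the session somewhere its data doesn't exist.

```php
<?php
declare(strict_types=1);

// Hypothetical sticky routing: the same session ID always maps to the same
// backend, regardless of how busy that backend is.
function stickyBackend(string $sessionId, array $backends): string
{
    if ($backends === []) {
        throw new InvalidArgumentException('No backends available');
    }
    $backends = array_values($backends);
    // Mask to 31 bits so the hash is non-negative on 32- and 64-bit builds.
    $hash = crc32($sessionId) & 0x7FFFFFFF;
    return $backends[$hash % count($backends)];
}

$pool = ['app1', 'app2', 'app3'];
$home = stickyBackend('abc123', $pool);
echo "abc123 is pinned to {$home}", PHP_EOL;

// If the pinned server goes offline, the same session is routed to a server
// that never stored its data locally.
$survivors = array_values(array_diff($pool, [$home]));
echo "after {$home} fails, abc123 goes to " . stickyBackend('abc123', $survivors), PHP_EOL;
```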

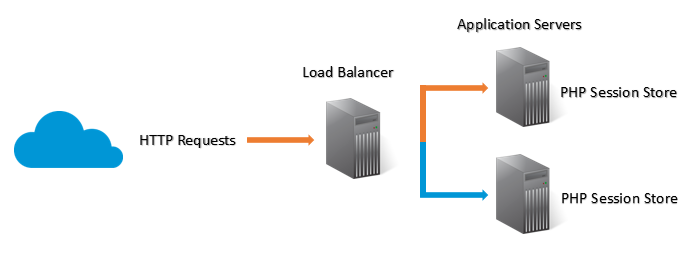

Offloading your session storage

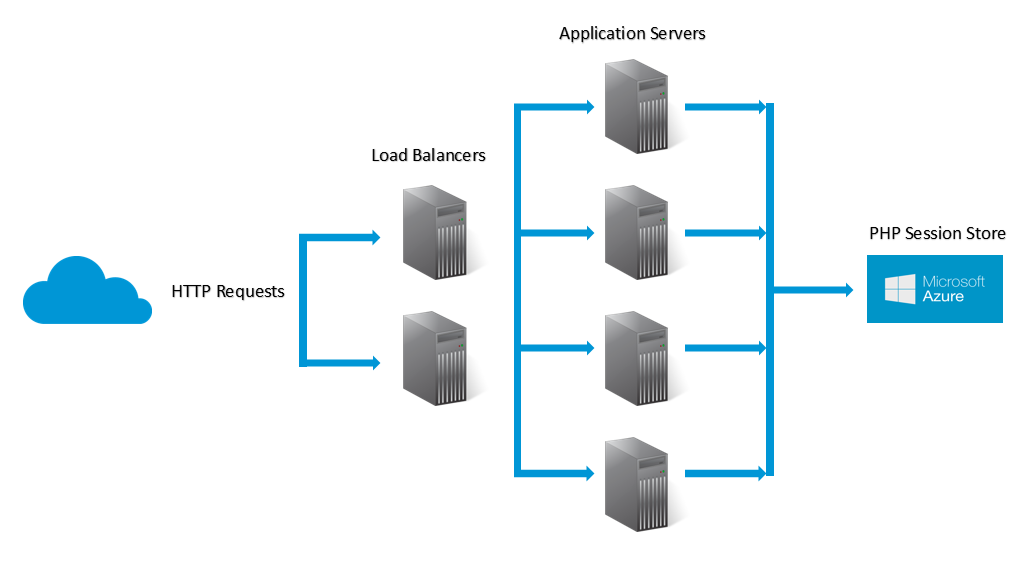

You can offload your session storage in many ways, and popular choices include memcached or an RDBMS. As I was already using Azure Table Storage for my main data storage requirements, it seemed like a logical choice for my session storage too. Every session lookup is made using the session ID. In Table Storage, that lookup can be made blisteringly fast by using the session ID as the Row Key, which made it a really fast, powerful option. There are a few examples out there of using Table Storage as your PHP session store. However, some were written for older versions of the SDK, and others didn't leverage features Table Storage has to offer, like batch operations or the built-in timestamp. I ended up writing my own, and really, it wasn't that large a task: it came in at under 130 lines of code. Now, instead of an application server writing sessions to its local disk, sessions get written into my Sessions table in Azure.
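The following is not the code from report-uri.io. It is a minimal sketch, assuming PHP 8, of the general shape such a handler takes:

- It implements PHP's `SessionHandlerInterface`.
- It stores every session under one fixed Partition Key, with the session ID as the Row Key. The value `sessions` is an assumption.
- It talks to storage through a hypothetical `SessionTable` interface rather than the real Azure SDK, so an in-memory stand-in can run it.

Garbage collection is left as a stub in this sketch.

```php
<?php
declare(strict_types=1);

// Hypothetical storage abstraction. A real implementation would wrap the
// Azure Table Storage SDK; these method names are NOT the SDK's API.
interface SessionTable
{
    public function get(string $partitionKey, string $rowKey): ?string;
    public function upsert(string $partitionKey, string $rowKey, string $data): void;
    public function delete(string $partitionKey, string $rowKey): void;
}

// In-memory stand-in so the sketch runs without an Azure account.
final class InMemorySessionTable implements SessionTable
{
    /** @var array<string, string> */
    private array $rows = [];

    public function get(string $partitionKey, string $rowKey): ?string
    {
        return $this->rows["{$partitionKey}|{$rowKey}"] ?? null;
    }

    public function upsert(string $partitionKey, string $rowKey, string $data): void
    {
        $this->rows["{$partitionKey}|{$rowKey}"] = $data;
    }

    public function delete(string $partitionKey, string $rowKey): void
    {
        unset($this->rows["{$partitionKey}|{$rowKey}"]);
    }
}

// Session handler that keys every session by its ID (the Row Key) inside
// one fixed partition, so each read is a single point lookup.
final class TableSessionHandler implements SessionHandlerInterface
{
    public function __construct(
        private SessionTable $table,
        private string $partitionKey = 'sessions'
    ) {
    }

    public function open(string $path, string $name): bool
    {
        return true; // nothing to open: the table client is already configured
    }

    public function close(): bool
    {
        return true;
    }

    public function read(string $id): string
    {
        // PHP expects an empty string (not false) for a brand-new session.
        return $this->table->get($this->partitionKey, $id) ?? '';
    }

    public function write(string $id, string $data): bool
    {
        $this->table->upsert($this->partitionKey, $id, $data);
        return true;
    }

    public function destroy(string $id): bool
    {
        $this->table->delete($this->partitionKey, $id);
        return true;
    }

    public function gc(int $max_lifetime): int|false
    {
        return 0; // expired-session cleanup is left out of this sketch
    }
}
```

Two handlers sharing one table behave like two application servers sharing the Sessions table: whatever one writes, the other can read. Wiring it in would presumably be a call to `session_set_save_handler($handler, true)` before `session_start()`. A `SessionTable` implementation backed by the Azure SDK would replace the in-memory one.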

This is pretty much what https://report-uri.io looked like at launch and is exactly how I handle my session storage. When a session is created, the application server creates the session in Azure. As subsequent requests hit the load balancers, they pass each request to the application server with the least load, so the visitor gets the best possible response time. That application server looks up the session in Azure using the session ID passed in with the cookie and fetches the contents of the session. On average, because I'm using a Row Key to look up the session, Azure returns the session in less than 100ms, including network latency! I don't have to worry about scale, provisioning or maintaining a database, or even what throughput I may require; it's all taken care of.

The other great thing you gain from offloading your session storage is that your application servers are now truly ephemeral. That is, nothing of any consequence is stored on them, and they can be created or destroyed on a whim. If one of the application servers has a problem or is showing slow performance, it can simply be terminated and nothing will be lost. You can bring servers online and take them offline at the drop of a hat, without having to worry about synchronising your sessions, losing them or anything else.
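The routing change can be sketched in a few lines. Both functions are hypothetical, an array stands in for the Sessions table, and the load figures are made up. Once no backend owns the session, the balancer can pick the least-loaded server, and terminating a server loses nothing.

```php
<?php
declare(strict_types=1);

// Hypothetical least-load routing: with sessions held centrally, the
// balancer is free to pick whichever backend reports the lowest load.
function leastLoadedBackend(array $loadByBackend): string
{
    if ($loadByBackend === []) {
        throw new InvalidArgumentException('No backends available');
    }
    asort($loadByBackend); // ascending by load, backend names kept as keys
    return (string) array_key_first($loadByBackend);
}

// Any backend can serve the request because it fetches the session by ID
// from the shared store (an array standing in for the Sessions table).
function serveRequest(string $backend, string $sessionId, array $sharedStore): string
{
    $data = $sharedStore[$sessionId] ?? '';
    return "{$backend} served {$sessionId}: {$data}";
}

$sessions = ['abc123' => 'user=alice'];
$load = ['app1' => 0.9, 'app2' => 0.2, 'app3' => 0.5];
echo serveRequest(leastLoadedBackend($load), 'abc123', $sessions), PHP_EOL;

// Terminate app2: nothing of consequence was stored on it, so the session
// is simply served by the next least-loaded backend.
unset($load['app2']);
echo serveRequest(leastLoadedBackend($load), 'abc123', $sessions), PHP_EOL;
```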

The code

In the interest of helping others harness the power of Azure Table Storage for their PHP session storage, and to get some much-needed feedback on my implementation, I have decided to publish my code right here on GitHub. There isn't a great deal to take care of in all honesty. I wrote my own to leverage a couple of features that I thought were missing from every other implementation I could find: the built-in timestamps that Azure maintains on all entities in Table Storage, and batch transactions.

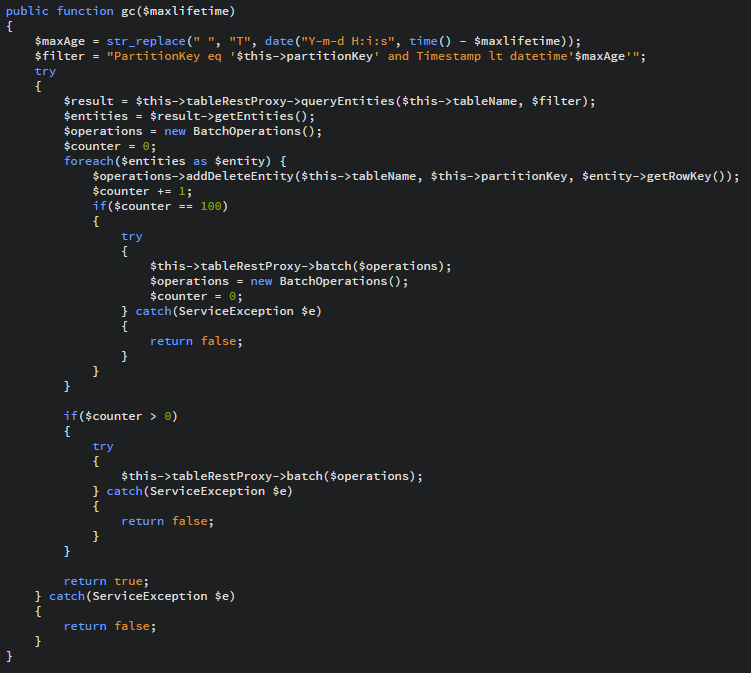

Garbage Collection and expired sessions

Depending on your specific PHP configuration, you can alter the frequency of garbage collection. It's all down to two settings in your php.ini file: session.gc_probability and session.gc_divisor. Each time a PHP session is started, there is a session.gc_probability / session.gc_divisor (default 1/100) chance that the garbage collector will be called. When it is, PHP calls the gc() method and passes in the $maxlifetime value, which is defined by session.gc_maxlifetime. Any session that hasn't been accessed within the previous $maxlifetime seconds should be deleted. Here is my implementation.
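The arithmetic behind these settings is small enough to sketch. `gcChance()` and `isExpired()` are illustrative helpers, not part of PHP. With the default `session.gc_maxlifetime` of 1440 seconds, a session last touched 30 minutes ago counts as expired, while one touched 10 minutes ago does not.

```php
<?php
declare(strict_types=1);

// Probability that a given session start triggers gc():
// session.gc_probability / session.gc_divisor.
function gcChance(int $probability, int $divisor): float
{
    return $divisor > 0 ? $probability / $divisor : 0.0;
}

// A session is expired when it has not been touched for more than
// session.gc_maxlifetime seconds (the $maxlifetime value passed to gc()).
function isExpired(DateTimeImmutable $lastAccess, int $maxLifetime, DateTimeImmutable $now): bool
{
    return $now->getTimestamp() - $lastAccess->getTimestamp() > $maxLifetime;
}

$chance = gcChance((int) ini_get('session.gc_probability'), (int) ini_get('session.gc_divisor'));
printf("gc() runs on roughly %.2f%% of session starts\n", $chance * 100);
```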

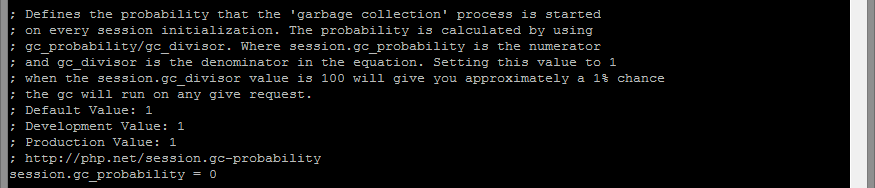

A quick point to note on Ubuntu: PHP garbage collection is disabled by default because session.gc_probability is set to 0 instead of 1. No garbage collection ever takes place, which makes it look like your gc() method isn't working at all!

If you're running PHP on Ubuntu (or another Debian-based distro), check this setting in your php.ini file; it might save you a lot of debugging.
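Here is a quick runtime check, as a sketch. `gcWillRun()` is a hypothetical helper that reports whether PHP will ever call `gc()`, and the `ini_set()` fallback only affects the current request. As far as I'm aware, the Debian and Ubuntu packages set the probability to 0 deliberately. They clean up file-based sessions with a cron job instead, which does nothing for sessions stored in Azure.

```php
<?php
declare(strict_types=1);

// True when PHP will ever call the session handler's gc() method.
// ini_get() returns false for an unknown setting, which casts to 0 here.
function gcWillRun(string|false $probability, string|false $divisor): bool
{
    return (int) $probability > 0 && (int) $divisor > 0;
}

if (!gcWillRun(ini_get('session.gc_probability'), ini_get('session.gc_divisor'))) {
    // Per-request override; it must run before session_start().
    // Setting session.gc_probability = 1 in php.ini is the permanent fix.
    ini_set('session.gc_probability', '1');
    echo "session.gc_probability was 0; enabled it for this request", PHP_EOL;
}
```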

Using System Properties

One of the first things I've done differently is leverage the built-in system property called Timestamp, which Azure maintains for us. Every time an entity is modified, Azure updates its Timestamp to the time of that modification. This means I don't need to add and maintain my own property. That reduces storage and bandwidth costs and gives faster transaction times thanks to a smaller payload.

I'm using the Timestamp to look for sessions that haven't been accessed within the allotted time so they can be deleted. As the query filters on the Partition Key but not on a Row Key, it has to do a full partition scan. That's not ideal, but it can't really be avoided in this situation, and it's not as bad as it could be. Once the query returns, I will have up to 1,000 entities that are expired sessions; that is the maximum Azure returns in a single response. The next step is deleting them all.
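The query can be expressed as an OData filter string. This sketch assumes the sessions live in a partition called `sessions`, and `expiredSessionFilter()` is a hypothetical helper. The `datetime'YYYY-MM-DDThh:mm:ssZ'` literal is the Table service's filter syntax for DateTime properties. How the string is handed to the SDK's query method is left out.

```php
<?php
declare(strict_types=1);

// Build an OData filter selecting sessions in one partition whose Timestamp
// is older than $maxLifetime seconds. Filtering on PartitionKey alone means
// the service has to scan that whole partition.
function expiredSessionFilter(string $partitionKey, int $maxLifetime, DateTimeImmutable $now): string
{
    $cutoff = $now
        ->setTimezone(new DateTimeZone('UTC'))
        ->modify(sprintf('-%d seconds', $maxLifetime));

    // Single quotes inside OData string literals are escaped by doubling them.
    $escapedKey = str_replace("'", "''", $partitionKey);

    return sprintf(
        "PartitionKey eq '%s' and Timestamp lt datetime'%s'",
        $escapedKey,
        $cutoff->format('Y-m-d\TH:i:s\Z')
    );
}

echo expiredSessionFilter('sessions', 1440, new DateTimeImmutable('now')), PHP_EOL;
```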

Batch Operations

To reduce the cost of transactions against Azure, and to improve performance, you can batch operations together in groups of up to 100. The query above could return as many as 1,000 expired sessions, which would be 1,000 separate delete operations that I'd be billed for. Using batch operations, the whole set can be deleted in only 10 transactions. It also takes a fraction of the time, simply because there are far fewer HTTP round trips. That's a 99% reduction in cost and a considerable boost on the performance side of things too! The other restriction on batching is that all of the operations in a batch must target the same partition, which isn't a problem for my session store.
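The arithmetic is easy to check with a small sketch. `planDeleteBatches()` is a hypothetical helper. It splits the Row Keys of up to 1,000 expired sessions, all in the same partition, into chunks of at most 100, and each chunk would become one batch request. Sending the batches to Azure is not shown.

```php
<?php
declare(strict_types=1);

const MAX_BATCH_SIZE = 100; // Azure's limit on operations per batch

// Split the Row Keys of expired sessions (all in one partition) into chunks,
// each of which could be sent as a single batch transaction.
function planDeleteBatches(array $rowKeys): array
{
    return array_chunk($rowKeys, MAX_BATCH_SIZE);
}

$expired = array_map(fn (int $i): string => "session{$i}", range(1, 1000));
$batches = planDeleteBatches($expired);

$saving = 1 - count($batches) / count($expired);
printf("%d deletes -> %d transactions (%.0f%% fewer)\n", count($expired), count($batches), $saving * 100);
```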

Continuation Tokens

For those familiar with Table Storage, you may have noticed that I haven't implemented Continuation Tokens. These aren't handled for you in the Azure PHP SDK, so you need to handle them yourself. I mentioned above that a query can return a maximum of 1,000 entities, and this is where the continuation token comes in. Azure will also issue a continuation token in other circumstances, such as when a query executes for more than 5 seconds or crosses a partition boundary. In any of these cases, you issue the same query against Azure again and pass in the continuation token to carry on from where you left off. Normally this is what you would want to do, and it is what I do in all of my other queries. In the garbage collection, however, I'm happy enough deleting up to 1,000 entities on each call. Besides, if there aren't more than 1,000 sessions to be deleted, a continuation token typically isn't issued anyway. The garbage collector is called on roughly every 100th session start, so deleting up to 1,000 old sessions each time should be sufficient to ensure I don't build up a backlog of old sessions in the store. The 1,000 limit also caps the work done in any single gc() call and spreads the cleanup out a little more evenly.
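For the queries where continuation tokens do matter, the loop looks roughly like the following. `queryAllPages()`, `queryFirstPage()` and the `$fetchPage` callable are hypothetical. Each call to `$fetchPage` is assumed to return the page's entities plus the next token, or `null` when there are no more results. In the REST API, the token actually arrives as the `x-ms-continuation-NextPartitionKey` and `x-ms-continuation-NextRowKey` headers; here a single opaque string stands in for both.

```php
<?php
declare(strict_types=1);

// Hypothetical page fetcher contract: $fetchPage(?string $token) returns
// [entities, nextToken], where nextToken is null once results are exhausted.
function queryAllPages(callable $fetchPage): array
{
    $results = [];
    $token = null; // null requests the first page
    do {
        [$entities, $token] = $fetchPage($token);
        $results = array_merge($results, $entities);
    } while ($token !== null);
    return $results;
}

// Garbage-collection style: take only the first page (up to 1,000 entities)
// and ignore any continuation token.
function queryFirstPage(callable $fetchPage): array
{
    [$entities] = $fetchPage(null);
    return $entities;
}
```

The `queryFirstPage()` variant mirrors the trade-off made for garbage collection: anything past the first page simply waits for a later `gc()` call.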

Read More: Choosing and using Azure Table Storage for report-uri.io - Working with Azure Table Storage - The Basics