In line with our constant desire to improve and offer the best service we can, Report URI recently went through an independent penetration test as many other companies and organisations do. Unlike many other companies and organisations though, I'd like to talk about our experience publicly and share our results with you!

What is a Penetration Test?

According to Wikipedia, a Penetration Test is described as the following:

A penetration test, colloquially known as a pen test, pentest or ethical hacking, is an authorized simulated cyberattack on a computer system, performed to evaluate the security of the system.

In short, a 'pen test' is where you pay a group of highly skilled security experts to try to hack into your application to see if they can break it, steal data or do a host of other unsavoury things. The National Cyber Security Centre here in the UK also describe a pen test similarly:

A method for gaining assurance in the security of an IT system by attempting to breach some or all of that system's security, using the same tools and techniques as an adversary might.

An organisation can have one of many motivations for needing or wanting a penetration test, including contractual or regulatory requirements, and for us there was a clear set of reasons.

Why we needed a Penetration Test

We're quite fortunate that Report URI, whilst a small company, has some great people on the team already. I originally started the company because of my interest in application security and I have a little background in programming having done it as a hobby and a job for years, and achieving a BSc (Hons) at university in software engineering. This meant that from the very beginning, any software or processes that I created had a little security knowledge baked in and that's a very good place to start from. As Report URI grew we needed to expand and that's when Troy Hunt invested in the company and joined the team. Bringing more security and software engineering knowledge, Troy also delivered his Hack Yourself First training and soon after I partnered on delivering that too. Things were really looking up on the security and software side of things and then to top all of that off Michal Špaček joined the team! Michal is a senior PHP developer and delivers 2 security focused training courses on TLS and PHP, our language of choice at Report URI. To say that we have a good amount of experience with both software and security is somewhat of an understatement, but why am I telling you all of this?

It doesn't matter how much experience or knowledge you have in your organisation, there's nothing that can match a highly skilled set of independent eyes taking a look at your application. Of course we scrutinise ourselves on an ongoing basis and constantly strive to achieve perfection but we're human and we make mistakes, things slip past us and sometimes you just can't see the wood for the trees. Using DAST and SAST tools, coupled with our internal knowledge, certainly helps us to reduce the chance of having issues, but it certainly doesn't prevent us from having them.

Engaging a Penetration Testing Company

There are so many companies out there that offer pen test services but for me, the choice was pretty easy. For several years I worked at a company called PenTest and knowing the company from the inside as I do, knowing the calibre of testers that they employ and knowing that I can fully trust them, there really wasn't a decision to make at all. We engaged the team from PenTest to conduct a 5 day test against Report URI and whilst that might not sound like much, it's a lot. Having skilled people do nothing but attack an application for that length of time can turn up some pretty interesting things!

One thing that I know from my time in this industry as a penetration tester and as an independent security researcher is that organisations are often not happy about hearing from either. As an independent security researcher I can understand initial hesitations and worry about hearing from someone like me, but penetration testers often encounter similar issues too. It sounds weird but I'm sure that any tester reading this will agree and will probably have experienced the same thing. A company may have engaged you because of a regulatory or legal need to do so, in which case you're ticking a box for them and those tests are never fun. Companies can also engage you because they genuinely want a test but they still treat you like the enemy, like an outsider. If you ask for help you're often met with unwilling responses and whilst you might not think it's a good idea to help a tester, it's the best thing you can do.

A tester is there to look for issues in your application and they have a limited time to do it. The more you help them and the faster they can move, the more likely they are to find issues that exist. If you slow a tester down and they don't find issues it doesn't mean you don't have any issues, it just means you don't know about the issues you have and you can't fix them. Those issues remain in place for an attacker to find. Scope limits on a test are also one of the most annoying things I remember: you can test an application, but don't touch A, B, C or this thing, oh, and you can't do this either. Real attackers don't have scope limits and if you want to defend against them, your testers can't have scope limits either.

What was our scope?

Everything in our application was in scope, we wanted the testers to operate as close to real attackers as possible. There are of course certain common sense safety precautions we take, like if they want to steal data from other users they must register multiple user accounts and steal data between themselves. This of course still simulates a real attack but poses no threat to our customers having their data accessed in an unauthorised fashion. The testers also asked for access to our source code for the test which is something that many companies simply would not do. We provided our source code for the test because it allowed the testers to identify and test issues much more quickly. A tester can sit for hours trying to bypass a filter, or they can read your source code and bypass it in 10 minutes. Given what we're trying to achieve, which of those is the better outcome?

Other than this we gave the testers direct contact with Michal and me so that they could ask any questions they needed throughout the test. Going back to the efficiency of the test, if they needed help with something I didn't want them sat around trying to figure it out when they could just ask us and get going again sooner. The final steps to help them out were to disable our Cloudflare WAF for their source IP addresses and give them information on our internal architecture, again, all in the interest of getting the most out of this test.

The results!

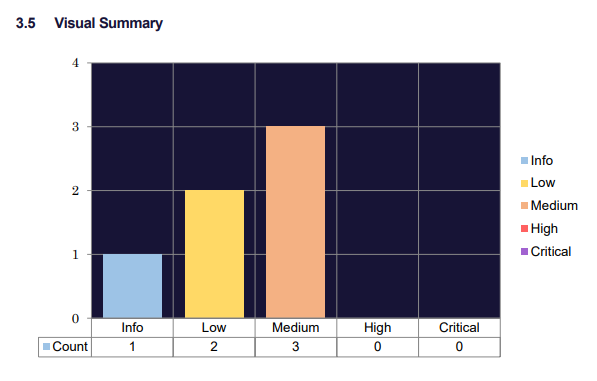

I wasn't expecting to have no issues found and if you're going into a test of this magnitude expecting nothing to be found, you're either incredibly brave or incredibly [something else]! We had a total of 5 issues raised as a result of the test:

This was a lower number of issues than I was expecting for this round of testing but the great news is that we had no High or Critical rated issues. We had issues found that could have been High or Critical, but due to mitigating controls they were not rated as such and they scored much lower.

Cross-Site Scripting (XSS)

Yep, that's right, for the first time ever someone found an XSS vulnerability in Report URI. As a security focused company with all of the skills mentioned above, we're ultimately still human beings and make mistakes. Acceptance of this truth is the reason that we use mechanisms like Content Security Policy, to provide defence in depth, and wow did CSP step right up and save our ass on this one.

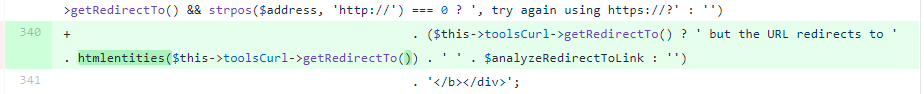

That is un-escaped user input making it right back out into the page and the single cause of what could have been a very serious problem for us. Fortunately for us though we eat our own dogfood and have a good CSP in place.

script-src cdn.report-uri.com api.stripe.com js.stripe.com static.cloudflareinsights.com;

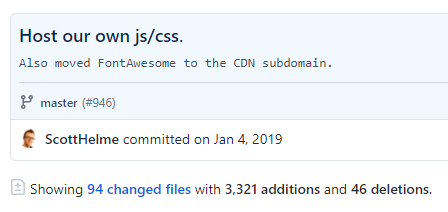

Our script-src directive in CSP does not allow for the execution of unsafe-inline script, by design, and as a result, any inline script tag injected will not be run. You can also see that our external list of sites that can load script is very small, by design, and does not include any of the variety of JavaScript CDNs out there. Inclusion of something like cdnjs.com or code.jquery.com would have allowed the inclusion of outdated libraries from those locations that have potential security issues, allowing an attacker to cause harm. This is the reason we self-host our own JavaScript files. If self-hosting isn't an option for you, consider specifying a path in your CSP directives, like cdnjs.com/jquery/not-vulnerable-version.

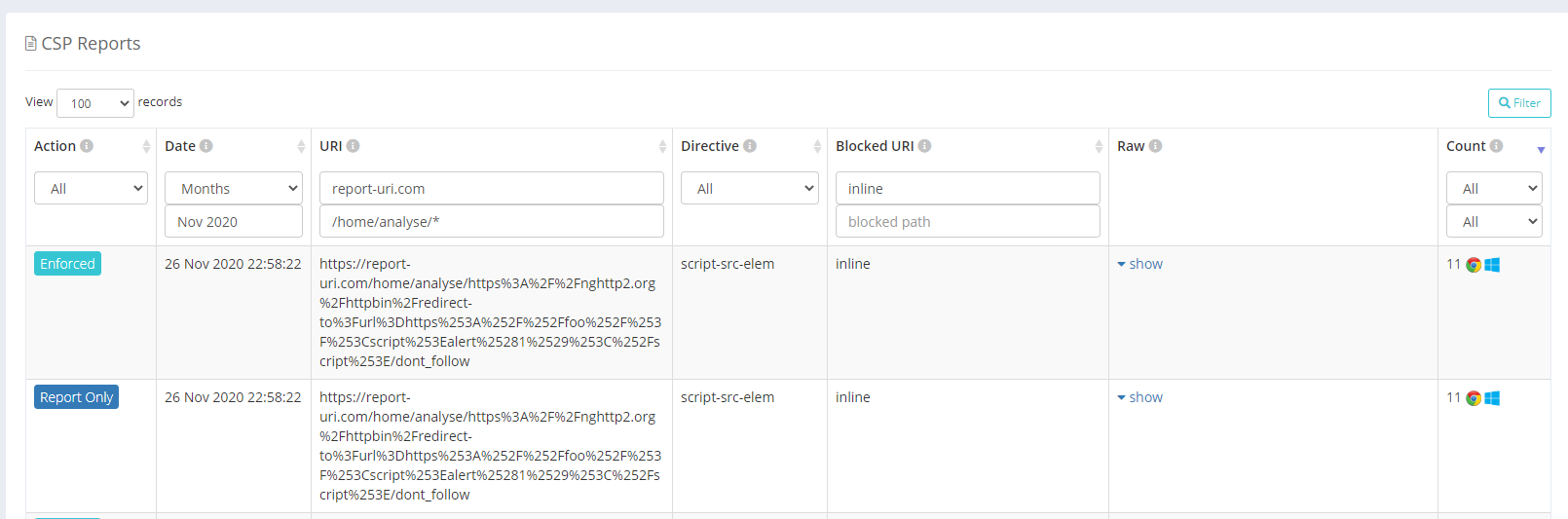

To further improve our own CSP you can see that we already have a CSPRO header deployed using CSP Nonces so, very soon, our CSP will be even stronger. The final thing to look at, of course, is the reports that we got (and I was keeping a very close eye on) during the test where we can see the CSP and CSPRO triggering reports on the attempted execution of inline script.
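As a rough illustration of the nonce approach, here is a minimal PHP sketch of a report-only, nonce-based script-src. The host list is copied from the policy shown earlier; the function name, the nonce length and the way the header is assembled are assumptions for illustration, not our actual implementation, and the reporting directive is left out for brevity.

```php
<?php

/**
 * Build a nonce-based script-src policy string.
 * Hypothetical helper: the name and signature are illustrative only.
 */
function buildScriptSrcPolicy(string $nonce, array $hosts): string
{
    return "script-src 'nonce-" . $nonce . "' " . implode(' ', $hosts) . ';';
}

// A fresh, unpredictable nonce has to be generated for every response.
$nonce = base64_encode(random_bytes(16));

$policy = buildScriptSrcPolicy($nonce, [
    'cdn.report-uri.com',
    'api.stripe.com',
    'js.stripe.com',
    'static.cloudflareinsights.com',
]);

// Report-Only: violations are reported but nothing is blocked yet.
if (!headers_sent()) {
    header('Content-Security-Policy-Report-Only: ' . $policy);
}

// Only script tags carrying the matching nonce would be allowed to run.
echo '<script nonce="' . htmlspecialchars($nonce, ENT_QUOTES, 'UTF-8') . '">/* trusted inline script */</script>', PHP_EOL;
```

An injected script tag has no way of knowing the nonce for the current response, so it would be reported (and, once enforced, blocked) just like the inline script in the test.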

CSP and reporting work, we're living proof.

NB: manual escaping is a bad idea still lingering in this legacy part of our application! Frameworks that auto-escape for you are the future.
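For anyone wondering what escaping looks like in plain PHP, here is a minimal sketch. The helper name e() and the sample markup are made up for this example; it only covers HTML body and quoted attribute contexts, and a templating engine that escapes by default is still the better option.

```php
<?php

/**
 * Escape untrusted text for an HTML body or quoted-attribute context.
 * Hypothetical helper, not the code used in the application.
 */
function e(string $value): string
{
    return htmlspecialchars($value, ENT_QUOTES | ENT_SUBSTITUTE, 'UTF-8');
}

$userInput = '<script>alert(1)</script>';
echo '<p>You searched for: ' . e($userInput) . '</p>', PHP_EOL;
// prints: <p>You searched for: &lt;script&gt;alert(1)&lt;/script&gt;</p>
```

The catch with manual escaping is exactly what bit us: it only works if nobody ever forgets to call it.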

Server-Side Request Forgery (SSRF)

This is an issue that I get reported to me on an almost weekly basis by a variety of beg-bounty bug bounty hunters and it's always the same old story. Some tool said we have SSRF so they reported it. What they don't do is look at our Tools page and see that we are meant to send HTTP requests by design. However, this report of SSRF was different, it was legit!

Server-Side Request Forgery is when you can make a server issue a request that you instructed it to make. Our tools are designed to help people by scanning or analysing a provided domain and are, by design, essentially SSRF. We have some very strict limits on what you can do with this capability and of course somebody could put an IP address in to scan too. If you put an internal IP address in there you could get us to scan ourselves internally (the 'real' issue with SSRF) and that could allow you to look into places you shouldn't be looking. We had thought about this though and we already had protections in place to stop this from happening. Or, so we thought...

$flags = ($this->allowPrivateReservedIpRanges ? 0 : FILTER_FLAG_NO_PRIV_RANGE | FILTER_FLAG_NO_RES_RANGE);

We're using the allowPrivateReservedIpRanges flag to enable internal IP addresses in our dev environments for testing, but in production we use two PHP filter flags, FILTER_FLAG_NO_PRIV_RANGE and FILTER_FLAG_NO_RES_RANGE, to filter private and reserved IPv4/IPv6 ranges respectively. The problem is, it turns out you can bypass these filters using a funky trick of embedding an IPv4 address inside an IPv6 address.
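To make the intended behaviour concrete, here is a small sketch of how those flags behave with filter_var() on ordinary IPv4 input. The function name validateIp and its boolean parameter are stand-ins for this example; only the flags and the ternary come from the line above.

```php
<?php

/**
 * Validate an IP literal, optionally allowing private and reserved ranges.
 * $allowPrivateReserved stands in for the allowPrivateReservedIpRanges setting.
 */
function validateIp(string $ip, bool $allowPrivateReserved)
{
    $flags = $allowPrivateReserved ? 0 : FILTER_FLAG_NO_PRIV_RANGE | FILTER_FLAG_NO_RES_RANGE;

    // filter_var() returns the validated IP string, or false if it is rejected.
    return filter_var($ip, FILTER_VALIDATE_IP, $flags);
}

var_dump(validateIp('10.138.196.205', false)); // bool(false): private range rejected
var_dump(validateIp('10.138.196.205', true));  // string(14) "10.138.196.205"
var_dump(validateIp('8.8.8.8', false));        // string(7) "8.8.8.8"
```

For a plain private IPv4 address this does what we wanted. The interesting part is what happens when the same address arrives dressed up as IPv6.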

POST /home/analyse_url/ HTTP/1.1

Host: report-uri.com

-samesite=1; __Host-report_uri_csrf=25fd91d7b39efb3b14e24555bbef7cb3

url=http%3A%2F%2F[0:0:0:0:0:ffff:10.138.196.205]:6379%2Ftest999&follow=follow

Inside there you can see [0:0:0:0:0:ffff:10.138.196.205] as the host being scanned which is an internal IP on our DigitalOcean VLAN for one of our other servers. The idea is that you can't do this because we don't want you to and our filters should catch this, but this passed through our filters and allowed the testers to scan our internal servers. This allowed the tester to port scan us internally by trying many different internal IP address and port combinations to look for other interesting services.
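If the trick looks like magic, it helps to look at the raw bytes. The sketch below uses PHP's inet_pton() and inet_ntop() to show that the address in the request is an IPv4-mapped IPv6 address with the internal IPv4 address sitting in its last four bytes. The function name embeddedIpv4 is invented for this illustration.

```php
<?php

/**
 * Return the IPv4 address embedded in an IPv4-mapped IPv6 address
 * (::ffff:a.b.c.d), or null if $ip is not such an address.
 */
function embeddedIpv4(string $ip): ?string
{
    $packed = @inet_pton($ip); // 4 bytes for IPv4, 16 for IPv6, false if invalid
    if ($packed === false || strlen($packed) !== 16) {
        return null;
    }

    // IPv4-mapped addresses are 80 zero bits, then 16 one bits, then the IPv4 address.
    if (substr($packed, 0, 12) !== str_repeat("\x00", 10) . "\xff\xff") {
        return null;
    }

    $ipv4 = inet_ntop(substr($packed, 12));

    return $ipv4 === false ? null : $ipv4;
}

$host = '0:0:0:0:0:ffff:10.138.196.205';
echo bin2hex(inet_pton($host)), PHP_EOL; // prints 00000000000000000000ffff0a8ac4cd
echo embeddedIpv4($host), PHP_EOL;       // prints 10.138.196.205
```

A filter that only compares the address against IPv4 private ranges and IPv6 reserved ranges separately never gets to see the 10.x address hiding at the end.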

Checking 10.138.196.205:6377 -> port CLOSED

took: 1.627873s

Checking 10.138.196.205:6378 -> port CLOSED

took: 1.623326s

Checking 10.138.196.205:6379 -> port OPENED

took: 1.067102s

Checking 10.138.196.205:6380 -> port CLOSED

took: 1.611090s

Checking 10.138.196.205:6381 -> port CLOSED

took: 1.609535s

We run a strict set of firewall rules, even internally between servers, and servers that do not need to communicate with each other are not permitted to communicate with each other. This immediately limited the scope of any possible attack because the tools are run on our www servers which are some of our most restricted as they have no reason to communicate with anything other than our Redis session cache, which is the one identified in the port scan above. Due to us using an up-to-date version of curl and properly restricting the protocols that curl can use, there was no ability to talk to our session cache and the ultimate impact of the SSRF issue was to port scan part of our internal infrastructure.
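For reference, restricting curl's protocols from PHP can look something like the sketch below. It assumes the curl extension is loaded; the function name and the example URL are placeholders, and this is not a copy of our production configuration.

```php
<?php

/**
 * cURL options that confine an outbound request to HTTP and HTTPS.
 * Hypothetical helper for illustration.
 */
function restrictedProtocolOptions(): array
{
    $webOnly = CURLPROTO_HTTP | CURLPROTO_HTTPS;

    return [
        CURLOPT_PROTOCOLS       => $webOnly, // protocols allowed for the initial request
        CURLOPT_REDIR_PROTOCOLS => $webOnly, // protocols allowed when following a redirect
    ];
}

$ch = curl_init('https://example.com/');
curl_setopt_array($ch, restrictedProtocolOptions());
// A later curl_exec($ch) would refuse schemes such as gopher://, dict:// or file://.
```

With only HTTP and HTTPS available, an attacker who reaches an internal service can tell that a port is open, but has a much harder time speaking that service's own protocol to it.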

To remediate this problem we had to implement additional filters of our own to catch the problem that Michal has lovingly named "IPv4-in-IPv6-in-tortilla-in-tacos" and now we are properly limiting the scanning of internal endpoints. We have also taken the decision to limit the ability of our tools to communicate with ports other than HTTP:80 and HTTPS:443 which isn't something we wanted to do but felt was a good choice. We've monitored our data and whilst there is a small % of users that will be affected by this, we hope that everyone can understand the trade-off. Should anything like this crop up again in the future, the limitation of ports will reduce the impact of an attack and along with our other controls like scheme/protocol and upgraded IP filters, we should be OK.
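To give a flavour of what such checks can look like, here is a simplified sketch and not our actual filter. The function names are invented, it only deals with IP literals (a hostname would still need to be resolved and every resulting address checked the same way), and it unwraps IPv4-mapped IPv6 addresses before applying the PHP filter flags.

```php
<?php

/**
 * True only for IP literals outside the private and reserved ranges.
 * IPv4-mapped IPv6 addresses (::ffff:a.b.c.d) are unwrapped first so the
 * IPv4 rules apply to the embedded address.
 */
function isPublicIp(string $ip): bool
{
    $packed = @inet_pton($ip);
    if ($packed === false) {
        return false; // not an IP literal at all
    }

    if (strlen($packed) === 16 && substr($packed, 0, 12) === str_repeat("\x00", 10) . "\xff\xff") {
        $ip = inet_ntop(substr($packed, 12));
    }

    $flags = FILTER_FLAG_NO_PRIV_RANGE | FILTER_FLAG_NO_RES_RANGE;

    return filter_var($ip, FILTER_VALIDATE_IP, $flags) !== false;
}

/**
 * True only for http on port 80 and https on port 443.
 */
function usesAllowedPort(string $url): bool
{
    $defaults = ['http' => 80, 'https' => 443];
    $scheme = strtolower((string) parse_url($url, PHP_URL_SCHEME));
    if (!isset($defaults[$scheme])) {
        return false; // scheme restriction
    }

    $port = parse_url($url, PHP_URL_PORT); // null when the URL has no explicit port

    return $port === null || $port === $defaults[$scheme];
}

var_dump(isPublicIp('0:0:0:0:0:ffff:10.138.196.205'));                                  // bool(false)
var_dump(usesAllowedPort('http://[0:0:0:0:0:ffff:10.138.196.205]:6379/test999'));       // bool(false)
```

Either check on its own would have stopped the request from the report, which is the point of layering them.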

Denial of Service (DoS)

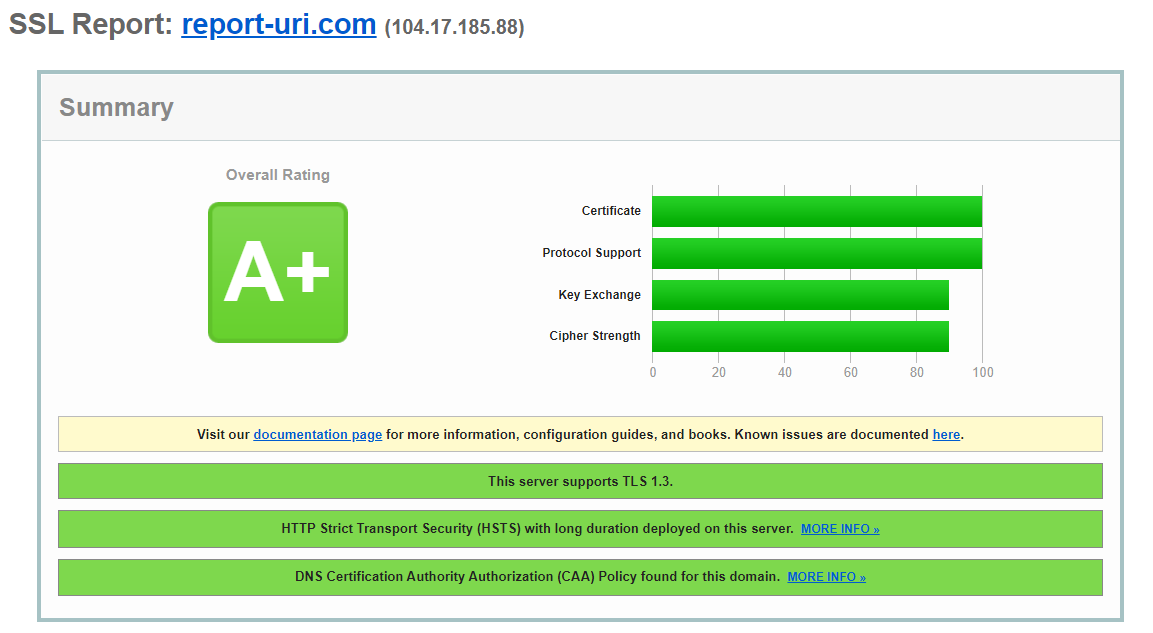

Another fine point raised in the test was a possible DoS vector against our tools linked to the outbound requests they make. Our timeout is very relaxed at 10 seconds and this could allow an attacker to consume a lot of resources on our servers by intentionally causing us to make many requests to slow servers. We do have rate limiting in place at Cloudflare, which was disabled for the testers, and this would go some way to mitigating the problem. However, 10 seconds is very relaxed so we decided to tighten it up a little.
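Here is a rough sketch of why the timeout matters and how it can be bounded with curl in PHP. The default values and the request rate are made-up numbers for illustration, not our real settings, and the function names are hypothetical. It assumes the curl extension is loaded.

```php
<?php

/**
 * cURL options that bound how long one outbound request can hold a worker.
 * The defaults are placeholders chosen for this example.
 */
function timeoutOptions(int $connectSeconds = 2, int $totalSeconds = 5): array
{
    return [
        CURLOPT_CONNECTTIMEOUT => $connectSeconds, // max time to establish the connection
        CURLOPT_TIMEOUT        => $totalSeconds,   // max time for the whole transfer
    ];
}

/**
 * Worst case: every request hits a slow server and is held until the timeout,
 * so the number of workers tied up is the request rate times the timeout.
 */
function maxBusyWorkers(int $requestsPerSecond, int $timeoutSeconds): int
{
    return $requestsPerSecond * $timeoutSeconds;
}

echo maxBusyWorkers(50, 10), PHP_EOL; // prints 500
echo maxBusyWorkers(50, 5), PHP_EOL;  // prints 250
```

Halving the timeout halves the number of workers an attacker can keep busy at a given request rate, and rate limiting reduces the other half of that product.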

Outdated Software Detected

Like many sites we depend on a few JS libraries and some of the versions we use were flagged in the report.

jQuery 3.4.1

jquery-migrate 3.1.0

noUiSlider 14.1.1

At the time of writing there is 1 known issue with that version of jQuery and none with the others, but we updated them all anyway. We now use the following:

jQuery 3.5.1

jquery-migrate 3.3.2

noUiSlider 14.6.3

Insecure SSL/TLS Cipher Suites

As we use Cloudflare for reverse proxying, we do not control the cipher suites presented to an external user and depend on Cloudflare to manage the selection of cipher suites used. At the time of writing we achieve an A+ grade on the SSL Labs test and will work to ensure this remains the case going forwards.

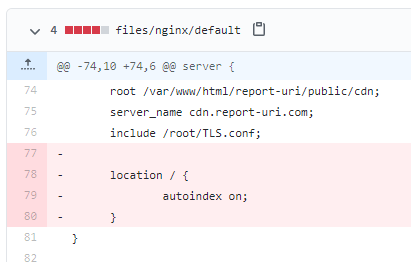

Information exposure through Directory Listing

We had directory listing enabled on our CDN subdomain largely to allow users to look at versions of the Report URI JS library that we host ourselves but this wasn't strictly necessary. The directory listing itself caused no issues and was there by design, however upon review we decided to remove it to avoid any confusion in the future.

Reflecting on the findings

Overall I have to say I'm really happy with how the test went and our results. Yes we had a bit of a bad issue with the XSS and the filters let us down on the SSRF issue, but both of these came about in our tools which are far from the focus of our service. We have some incredibly extensive and complex parsing code focused around receiving significant quantities of data from unauthenticated and untrusted sources and there wasn't a single issue found in any of that, despite extensive testing. We process billions of JSON payloads per month and millions of XML payloads, fetch data from external services to enrich those reports and do an unthinkable amount of database queries, all of which I'm very happy to say had no problems found. Overall I think it's fair to say that we did have some issues, but they weren't so bad in the grand scheme of things, thanks mostly to other mitigating controls.

I've said before many times, publicly, that I don't expect the companies I use to be perfect and have no issues, I expect them to resolve issues quickly and transparently. That's precisely what I want to do here, and it's why I'm being completely open and transparent about these issues and showing how quickly we moved to resolve them. I want to show others that no matter how hard you try, problems can and do happen. It's not being perfect that matters, it's responding quickly and properly.

To do as much as we can here to show others that security issues are not something to hide away and keep a secret, we're going to go one step further and publish the penetration test report that we received. The whole document, unaltered and unredacted, for anyone to read. I'm devastated that we had issues, of course, but I'm proud of how well we performed in responding to them.