I've seen the claim that HTTPS breaks backwards compatibility mentioned a few times now and I think it's time we had some solid facts on why this just isn't the case. Just as many other obstacles to deploying HTTPS have recently bitten the dust, backwards compatibility is another one we can strike off the list.

Recent advancements

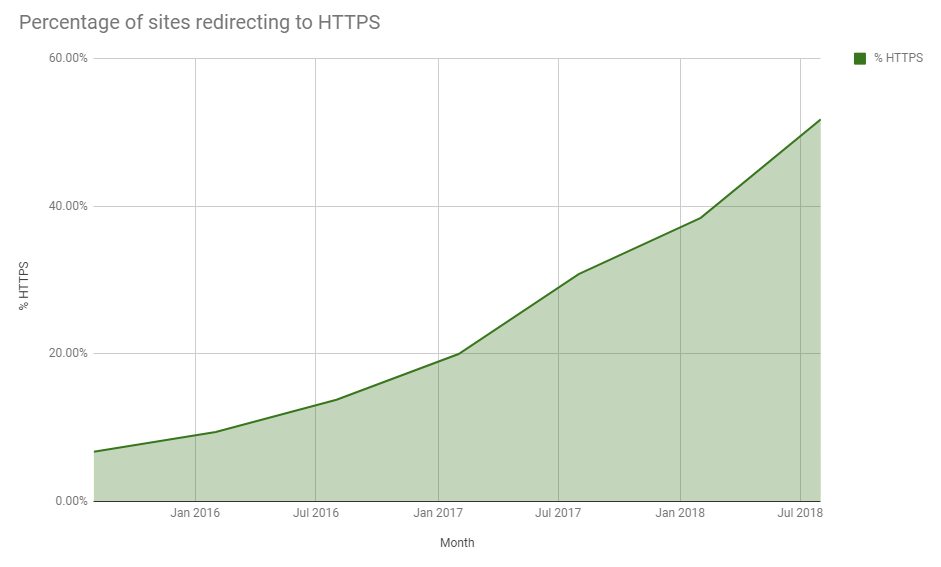

Over the last few years we've seen huge growth in the deployment of HTTPS across the web. HTTPS provides security for the connection, and often better performance, and my Aug 2018 security report showed that adoption has continued at a large scale.

I've talked about why we even need more phishing sites on HTTPS and just for fun, I hacked an Amazon Dash Button to issue a new HTTPS certificate for my website. The point is, HTTPS isn't slow or expensive any more, so why aren't sites supporting it? This blog post focuses on one specific reason and debunks it.

Backwards Compatibility

Have you ever heard this?

"I don't want to support HTTPS on my site because [insert ancient browser/client] can't do HTTPS"

I have. A few times actually, but also just now...

Don't need it. Excludes many older browsers.

— Jason Scott (@textfiles) December 27, 2018

I've heard it a few times and while I might be tempted to poke fun at how crazy this idea is, I'd like to prove why it's wrong. Let's not talk about the fact that we must go back countless years to find a client that can't do HTTPS at all, because the argument may still remain that they, in minuscule numbers, do still exist and for whatever reason may come to your site. Let's not talk about the fact that a browser that can't do any form of HTTPS probably can't do JavaScript either, not to mention countless problems with CSS and HTML too. We also need to think about what the client is doing on our site, because there's no social media, no banking, no login or forms or data/information of any kind if we're not using a secure connection. Let's skip past all of those issues that will likely break your site or render it useless and talk about how you can still support HTTPS and be backwards compatible with the client. There are 3 ways to tackle this and I will start with the least complex solution and work up to the most complex and probably best solution. (I say most complex, but they're all simple.)

HSTS

I've talked about HSTS before so if you need the background you can check my blog on HSTS - The missing link in Transport Layer Security. In short, the problem with HSTS is that it can only be served over HTTPS. If you have a site-wide redirect to HTTPS in place then you're going to break clients that can't do HTTPS.

HTTP/1.1 301 Moved Permanently

Location: https://example.com

...

HTTP/1.1 200 OK

Strict-Transport-Security: max-age=31536000

A simple solution to this problem is to have a single subresource on your page loaded over HTTPS. This could be something tiny like a single-pixel image. The response for the image can set the HSTS header because it was loaded over HTTPS. That means that the image will only load if the client can do HTTPS, thus only setting HSTS and forcing HTTPS for capable clients. People may also suggest HSTS Preloading, but that requires a 301 redirect in place from HTTP -> HTTPS, so sadly it isn't an option here. Either way, the method above works and allows us to get HSTS onto the client and full HTTPS. No breakage.
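To make the single-pixel idea concrete, here is a minimal Python sketch. The pixel path `/hsts-pixel.gif` and the function names are hypothetical, chosen only for illustration. The page served over HTTP embeds one image with an absolute `https://` URL, and the server adds the HSTS header only to responses that actually went out over HTTPS.

```python
HSTS_VALUE = "max-age=31536000"   # one year, matching the header shown above
PIXEL_PATH = "/hsts-pixel.gif"    # hypothetical path for the tiny image


def page_html(host: str) -> str:
    """Markup for the HTTP page: a single subresource requested over HTTPS."""
    return f'<img src="https://{host}{PIXEL_PATH}" width="1" height="1" alt="">'


def response_headers(is_https: bool) -> dict[str, str]:
    """Headers to add to a response, depending on how the request arrived."""
    headers: dict[str, str] = {}
    if is_https:
        # Browsers ignore HSTS received over plain HTTP, so it is only
        # worth sending on responses delivered over HTTPS.
        headers["Strict-Transport-Security"] = HSTS_VALUE
    return headers
```

A client that can't do HTTPS simply fails to load the pixel and carries on over HTTP, while a capable client fetches it, sees the header, and uses HTTPS from then on.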

CSP + UIR

Another awesome header, Content Security Policy, is also something I've covered before, so there's more reading if you'd like it in Content Security Policy - An Introduction. A very specific feature of CSP is Upgrade Insecure Requests, which allows you to force the upgrade of insecure subrequests on a page to be secure. This achieves basically the same outcome as the HSTS suggestion above, except you don't need to load a subresource on the page over HTTPS; you simply issue the CSP header with UIR configured.

HTTP/1.1 200 OK

Content-Security-Policy: upgrade-insecure-requests

This will force all assets on the page to be loaded over HTTPS and any/all of them can then set the HSTS header and deliver the policy to the client.

HTTP/1.1 200 OK

Strict-Transport-Security: max-age=31536000

If the browser has support for CSP and the UIR directive then it must have support for HTTPS so again, only clients that are capable will get the upgrade and lock in HSTS. No breakage.
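As a rough sketch of how the two headers could be combined on the server, here is a small WSGI application in Python. The page body and the image URL are placeholders. It sends the CSP header on every response and adds HSTS only when the request arrived over HTTPS, which WSGI exposes as `wsgi.url_scheme`.

```python
def app(environ, start_response):
    """Minimal WSGI app: CSP with UIR everywhere, HSTS only over HTTPS."""
    headers = [
        ("Content-Type", "text/html; charset=utf-8"),
        # Capable browsers will upgrade the http:// image below to https://
        ("Content-Security-Policy", "upgrade-insecure-requests"),
    ]
    if environ.get("wsgi.url_scheme") == "https":
        # The upgraded subrequests land here and pick up the HSTS policy.
        headers.append(("Strict-Transport-Security", "max-age=31536000"))
    start_response("200 OK", headers)
    # Placeholder page with one insecure asset reference.
    return [b'<img src="http://example.com/logo.png" alt="">']
```

For local experiments this could be served with `wsgiref.simple_server.make_server` from the standard library. In a real deployment the scheme seen by the application depends on how TLS is terminated in front of it.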

Listen to the browser

This last one is probably the best way to do this but honestly, I'd be tempted to do all 3 if I found myself in the situation of needing to support HTTP for 20+ year old clients. Modern browsers will send an HTTP Request Header along with HTTP requests to indicate that they are, in fact, capable of HTTPS.

GET / HTTP/1.1

Host: example.com

Upgrade-Insecure-Requests: 1

The Upgrade-Insecure-Requests HTTP Request Header allows the browser to tell the server that not only is it capable of HTTPS but that it wants HTTPS. Upon receiving an HTTP request from a client that sets this header in the request, the server can respond with a 301 to HTTPS instead of a 200 with the content. Again, only capable clients will set this request header so you can offer HTTPS in a perfectly backwards compatible fashion. No breakage.
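The server-side decision described above can be sketched in a few lines of Python. The function name and its return shape are assumptions made for illustration, not part of any framework. The `Vary` header is an addition of mine rather than something covered above: it tells caches that the response depends on the request header, so a cached redirect isn't handed to a client that never asked for HTTPS.

```python
def handle_plain_http(request_headers: dict[str, str], host: str, path: str):
    """Decide how to answer a request that arrived over plain HTTP.

    Returns a (status_code, response_headers) tuple.
    """
    # HTTP header names are case-insensitive, so normalise before the lookup.
    normalized = {name.lower(): value.strip() for name, value in request_headers.items()}
    vary = {"Vary": "Upgrade-Insecure-Requests"}

    if normalized.get("upgrade-insecure-requests") == "1":
        # The client has said it can do HTTPS and prefers it: redirect.
        return 301, {"Location": f"https://{host}{path}", **vary}

    # No preference signalled: serve the page over HTTP as before.
    return 200, vary
```

A request carrying `Upgrade-Insecure-Requests: 1` for `/` on `example.com` yields a 301 to `https://example.com/`, while a request without the header gets the normal 200 response.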

Another one bites the dust

If we want to see widespread adoption of encryption across the web then we have to encourage people to deploy it and remove barriers to adoption. From Let's Encrypt to SEO boosts and a whole community building tools, we are getting there and debunking reasons we can't/shouldn't do HTTPS is part of that journey.

I know it might seem ridiculous to support a browser that can't support HTTPS, but it can be done. They literally can't do anything else online, from banking to social media, reading the news or their emails, or even logging in to pretty much anything with a password field, but it can be done, so it's not a reason for refusing to support HTTPS.

If you're interested in a little further information related to the topic above then I have blogs on Migrating from HTTP to HTTPS? Ease the pain with CSP and HSTS! and Fixing mixed content with CSP too. There's also heaps of other awesome stuff out there:

https://httpsiseasy.com/ - a few short videos on how easy it can be to do HTTPS.

https://crawler.ninja/ - the source for the graph at the top and how many sites support HTTPS.

https://istlsfastyet.com/ - a site debunking more technical problems with HTTPS.

https://whynohttps.com/ - a list of the largest sites not yet redirecting to HTTPS.

https://doesmysiteneedhttps.com/ - more info on why you need HTTPS.

http://httpforever.com/ - the worst website in the world.