SPDY, pronounced 'SPeeDY', is a web protocol developed by Google that is primarily aimed at reducing page load time and providing better security. With the latest stable release of nginx featuring SPDY 3.1 support, it's time for an upgrade!

Let's Get SPDY!

You can head over to the nginx site and find the latest stable source release. At the time of writing, the latest stable build is 1.6.0 and mainline is 1.7.0. Personally, I choose to run the latest stable build, but if you want bleeding edge features and bug fixes, you can try the mainline build at your discretion. If you're running Ubuntu, you can use apt-get to install or update to the latest stable version of nginx.

sudo apt-get install nginx -f

When prompted about existing config files, you can hit enter to keep your existing configs, which is the default option. Once done, you should be able to see that the latest version of nginx has been installed.

nginx -v
nginx version: nginx/1.6.0

Earlier versions of nginx didn't build the SPDY module by default and had to be compiled from source, but you can check that SPDY is enabled using the following command.

nginx -V
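nginx -V writes its build details to standard error rather than standard output, so if you'd rather search for the SPDY flag than scan the output by eye, redirect stderr before piping it into grep. A minimal sketch for a POSIX shell; has_spdy_module is a hypothetical helper name:

```shell
# has_spdy_module (hypothetical helper): reads `nginx -V` output on stdin and
# succeeds (exit status 0) only if the SPDY module was compiled in.
has_spdy_module() {
  # "--" stops grep from treating the pattern as one of its own options.
  grep -q -- '--with-http_spdy_module'
}

# Usage (2>&1 because nginx -V prints to stderr):
#   nginx -V 2>&1 | has_spdy_module && echo "SPDY module present"
```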

Note the upper case 'V' this time; you need to look for "--with-http_spdy_module" in the output. Now that nginx is all sorted, quickly check that your OpenSSL version is up to date. You need at least version 1.0.1, which I'm pretty sure (I hope!) everyone has covered after Heartbleed, and a build date late enough to protect against the latest OpenSSL vulnerability, CCS injection.

openssl version
OpenSSL 1.0.1 14 Mar 2012

The basic openssl version command doesn't give terribly useful output, so you can use the -a flag to get a bit more detailed output.

openssl version -a
OpenSSL 1.0.1 14 Mar 2012
built on: Thu Jun 12 13:42:14 UTC 2014
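Comparing the "built on:" date against the cutoffs by eye is easy enough, but here's a small sketch that does it for you using the dates this post relies on (7 April 2014 for Heartbleed, 2 June 2014 for CCS), treating a build at any time on the cutoff day itself as covered. It assumes GNU date, whose -d option accepts free-form date strings (the BSD/macOS date does not), and openssl_build_status is a hypothetical helper name.

```shell
# openssl_build_status (hypothetical helper): given the text after "built on:"
# in `openssl version -a`, report whether the build is on or after each cutoff.
# Assumes GNU date (-d parses free-form dates, -u works in UTC).
openssl_build_status() {
  local built hb_cutoff ccs_cutoff hb ccs
  [ -n "$1" ] || return 2                      # no date given
  built=$(date -u -d "$1" +%s) || return 2     # unparseable date
  hb_cutoff=$(date -u -d '2014-04-07' +%s)     # Heartbleed cutoff from this post
  ccs_cutoff=$(date -u -d '2014-06-02' +%s)    # CCS cutoff from this post

  hb=no
  ccs=no
  [ "$built" -ge "$hb_cutoff" ] && hb=yes
  [ "$built" -ge "$ccs_cutoff" ] && ccs=yes
  echo "heartbleed=$hb ccs=$ccs"
}

# Usage:
#   openssl_build_status "$(openssl version -a | sed -n 's/^built on: //p')"
```

For the build shown above, this prints "heartbleed=yes ccs=yes".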

Any build date after April 7 2014 covers you for Heartbleed, and one after June 2 2014 covers you for CCS. Either of those more than covers our SPDY requirement, which is support for the Next Protocol Negotiation (NPN) TLS extension that arrived in OpenSSL 1.0.1. Now that we have everything covered, it's time to pull up a chair and actually enable SPDY for your site(s). Open the virtual host file for the site in question and find the listen directive. It should look something like this.

listen 443 ssl;

You need to change it to add the SPDY protocol.

listen 443 ssl spdy;
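If you have several virtual hosts to update, a sed one-liner can make the same change. A minimal sketch, assuming GNU sed and plain "listen ... 443 ssl;" directives; enable_spdy is a hypothetical helper and the Ubuntu-style path in the usage comment is a placeholder. Directives with extra parameters, such as "listen 443 ssl default_server;", are deliberately left for you to edit by hand.

```shell
# enable_spdy (hypothetical helper): adds "spdy" to `listen 443 ssl;` style
# directives (including `listen [::]:443 ssl;`) in the given nginx config file,
# keeping the original as FILE.bak. Assumes GNU sed for -i and -E.
# Lines that already say "ssl spdy;" don't match, so it is safe to re-run.
enable_spdy() {
  sed -i.bak -E \
    's/^([[:space:]]*listen[[:space:]]+([^;[:space:]]*:)?443[[:space:]]+ssl)[[:space:]]*;/\1 spdy;/' \
    "$1"
}

# Usage (as root; the path is a placeholder for your own vhost file):
#   enable_spdy /etc/nginx/sites-available/example.com
#   nginx -t && service nginx reload
```

Running nginx -t first lets nginx validate the edited configuration before anything is reloaded.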

That's it. Seriously! Just save the file and reload your nginx configuration.

sudo service nginx reload

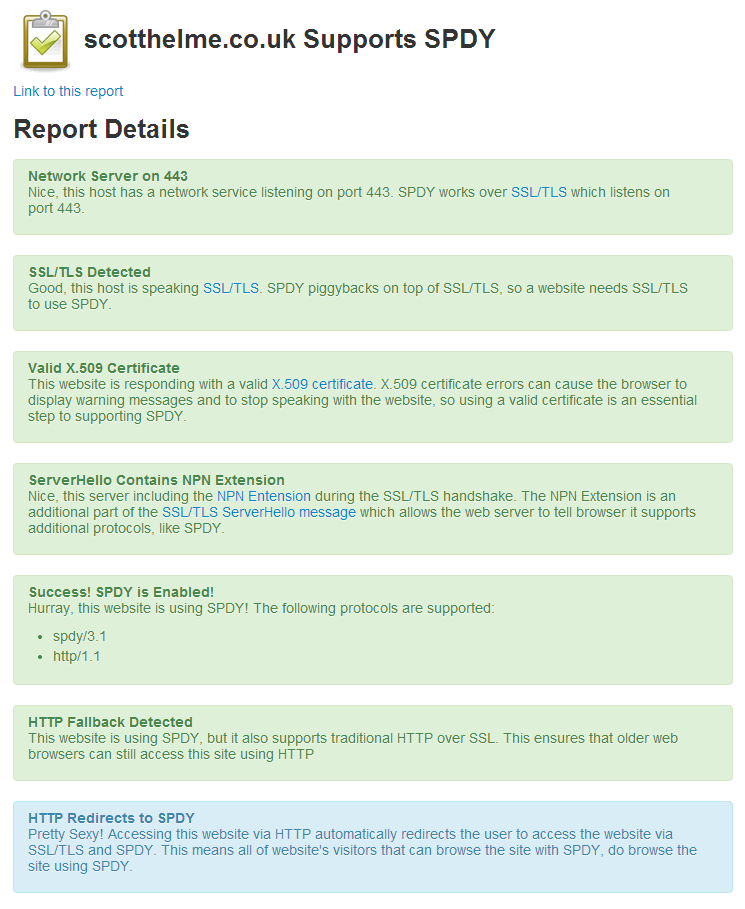

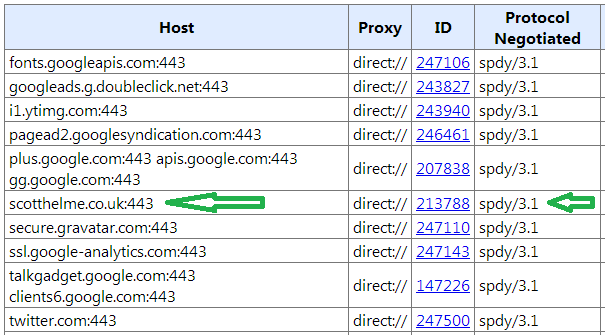

You're now SPDY enabled! You can head over to the handy SPDY check website and punch in your domain to test that everything has worked and you're serving using SPDY. If you use Google Chrome, you can also use the SPDY information page chrome://net-internals/#spdy and look for the spdy/3.1 protocol in use on your site.
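If you'd rather check from a terminal, the s_client tool in OpenSSL 1.0.1 and later accepts a -nextprotoneg option and reports the result on a line of the form "Next protocol: (1) spdy/3.1", where status 1 means a protocol was actually negotiated. A minimal sketch, assuming such an OpenSSL build; spdy_check and npn_protocol are hypothetical helper names and www.example.com is a placeholder:

```shell
# npn_protocol (hypothetical helper): reads `openssl s_client` output on stdin
# and prints the protocol agreed via NPN. Only status (1), "negotiated", is
# accepted; status (2) means the client and server had no protocol in common.
npn_protocol() {
  sed -n 's/^Next protocol: (1) //p'
}

# spdy_check (hypothetical helper): offers spdy/3.1 and http/1.1 to HOST:443
# over NPN and prints whichever one the server picked.
spdy_check() {
  echo | openssl s_client -connect "$1:443" -servername "$1" \
    -nextprotoneg 'spdy/3.1,http/1.1' 2>/dev/null | npn_protocol
}

# Usage:
#   spdy_check www.example.com   # prints spdy/3.1 if SPDY is being served
```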

But what does SPDY actually do?

SPDY has a few nice features and you can read a lot more in the full whitepaper about it. I will go over the main points briefly.

Multiplexed Streams
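To put rough numbers on the 10-asset page discussed in this section, here's a small sketch. It makes the same simplifying assumptions as the text: over plain HTTP(S) a browser opens one connection per asset up to a limit of 6 per domain, and over SPDY it opens exactly one connection per domain. connections is a hypothetical helper, not a real tool.

```shell
# connections (hypothetical helper): rough count of the TCP connections a
# browser opens for one page.
#   $1     protocol: "http" or "spdy"
#   $2     per-domain connection limit for plain HTTP(S)
#   $3...  number of assets served from each domain
connections() {
  local proto=$1 limit=$2 total=0 assets n
  shift 2
  for assets in "$@"; do
    if [ "$proto" = spdy ]; then
      n=1              # SPDY: one multiplexed connection per domain
    elif [ "$assets" -lt "$limit" ]; then
      n=$assets        # HTTP: one connection per asset...
    else
      n=$limit         # ...capped at the per-domain limit
    fi
    total=$((total + n))
  done
  echo "$total"
}

# Usage:
#   connections http 6 6 4   # sharded page (6 + 4 assets) over HTTP(S)
#   connections spdy 6 6 4   # the same sharded page over SPDY
#   connections spdy 6 10    # all 10 assets on one domain over SPDY
```

For the sharded page this gives 10 connections over HTTP(S) and 2 over SPDY, and serving all 10 assets from a single domain over SPDY brings it down to 1.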

One of the biggest benefits of SPDY is that it allows many concurrent requests to be made over a single TCP connection. Over plain HTTP(S), a page that requires 10 assets could result in up to 10 connections being opened to fetch them. Using SPDY, all 10 requests are interleaved over a single connection. This removes the overhead of setting up each extra TCP connection (and its TLS handshake), resulting in far higher efficiency.

This also presents an interesting dilemma when it comes to domain sharding. Sites can split assets across multiple domains to increase the speed at which they can be downloaded. Whilst the HTTP/1.1 RFC (RFC 2616) states that clients should not maintain more than 2 concurrent connections to a server, almost all browsers now disregard this and will open as many as 6 concurrent connections per domain to download assets faster. In my example page with 10 assets, this could result in 6 concurrent connections fetching the first 6 assets from one domain, alongside another 4 connections to a second domain for the rest. Because a SPDY connection is made per domain, domain sharding can now be detrimental, as the full benefits of SPDY can't be achieved. A separate SPDY connection still has to be made to each domain, incurring overheads that could be avoided if all assets were loaded from a single domain.

Request Prioritisation
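SPDY/3 lets the browser attach a priority from 0 (most urgent) to 7 (least urgent) to each request. The sketch below only models the ordering effect described in this section: it takes paths in the order a browser requested them and prints the order in which a priority-aware server would start sending them. The file-type-to-priority mapping in priority_for is made up for illustration, and a real server interleaves frames from several streams rather than sending whole responses one after another.

```shell
# priority_for (hypothetical helper): a made-up mapping from file type to a
# SPDY/3-style priority, where 0 is the most urgent and 7 the least.
priority_for() {
  case $1 in
    */|*.html) echo 0 ;;   # the page itself
    *.css|*.js) echo 1 ;;  # render-blocking assets
    *) echo 3 ;;           # images and everything else
  esac
}

# send_order (hypothetical helper): reads paths on stdin in request order and
# prints them in the order a priority-aware server would start sending them.
# sort -s (stable) keeps requests of equal priority in their original order.
send_order() {
  while read -r path; do
    printf '%s %s\n' "$(priority_for "$path")" "$path"
  done | sort -s -n -k1,1 | cut -d' ' -f2-
}

# Usage:
#   printf '/logo.png\n/app.js\n/index.html\n/photo.jpg\n/style.css\n' | send_order
```

With that input, /index.html comes out first, then /app.js and /style.css, then the two images in the order they were requested.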

With the ability to interleave many streams over a single TCP connection, we resolve the request serialisation problem, but introduce an issue with congestion. On a congested link with limited bandwidth, interleaving many requests could result in them all being delayed. To resolve this issue, SPDY introduces request prioritisation. We don't want a really high value asset being downloaded at the same reduced rate as other non-critical assets, so we assign it a priority. The client can still request all of the assets that it needs, and can rest assured that the high priority assets will be delivered first, with less important assets following later.

HTTP Header Compression
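SPDY/3 compresses every header block sent in one direction on a connection with a single shared zlib stream, so headers that repeat from request to request cost very little after the first time. This rough illustration shows the effect with ordinary tools: it compares the raw size of a second request's headers, their size when compressed on their own, and the extra bytes they add when compressed in the same stream as the first request. It uses gzip (plain DEFLATE) as a stand-in, since SPDY's real zlib framing and preset dictionary differ, and the header values are made-up samples.

```shell
# Made-up sample headers for two requests from the same browser.
req1='GET /index.html HTTP/1.1
Host: www.example.com
User-Agent: Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/35.0.1916.153 Safari/537.36
Accept: text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8
Accept-Encoding: gzip,deflate,sdch
Accept-Language: en-GB,en;q=0.8
Cookie: session=0123456789abcdef; theme=dark
'
# The second request differs only in its path.
req2=$(printf '%s' "$req1" | sed 's#/index.html#/style.css#')

bytes()    { printf '%s' "$1" | wc -c | tr -d ' '; }
gz_bytes() { printf '%s' "$1" | gzip -9 -n -c | wc -c | tr -d ' '; }

raw2=$(bytes "$req2")
alone2=$(gz_bytes "$req2")
# Approximate cost of request 2 when it shares a stream with request 1.
extra2=$(( $(gz_bytes "$req1$req2") - $(gz_bytes "$req1") ))

echo "request 2 raw:                $raw2 bytes"
echo "request 2 compressed alone:   $alone2 bytes"
echo "request 2 in a shared stream: ~$extra2 bytes"
```

The exact numbers depend on your gzip build, but the shared-stream figure should be a small fraction of the other two, which is the point of sharing one compression context per connection.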

Another great benefit of SPDY is that it compresses HTTP request and response headers. These headers are normally sent as uncompressed, human readable text, which wastes bytes and packets on the wire. Further to this, SPDY compresses all of the headers on a connection using a shared compression context, so headers that have already been sent, such as cookies and the user agent, cost very little to send again in subsequent requests or responses.

Fewer workers for the same task
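The connection arithmetic in this section is easy to check in the shell. max_clients is a hypothetical helper that applies the worker_processes * worker_connections limit and divides it by the number of connections each client opens; shell integer division rounds down, which is where 170 comes from.

```shell
# max_clients (hypothetical helper): upper bound on simultaneous clients.
#   $1  worker_processes
#   $2  worker_connections
#   $3  connections opened by each client
max_clients() {
  echo $(( $1 * $2 / $3 ))
}

max_clients 2 512 6   # plain HTTP(S), 6 connections per client: prints 170
max_clients 2 512 1   # SPDY, 1 connection per client: prints 1024

# To plug in your own numbers (path assumes Ubuntu's packaged layout):
#   nproc
#   grep -E 'worker_(processes|connections)' /etc/nginx/nginx.conf
```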

Nginx normally has one master process and several worker processes. The master process handles configuration and maintains the worker processes, while the worker processes are responsible for actually handling requests to the server and serving content. The number of worker processes is usually set to the number of CPU cores available on the server, as they are single threaded, and each can handle a fixed number of connections determined by worker_connections. This means that a server can only ever handle, at most, (worker_processes * worker_connections) client connections. As I mentioned earlier, browsers traditionally only held open 2 connections per domain, but we can now see that number climbing to 6 or more. On a typical dual core machine with the default worker_connections value of 512, that gives 2 * 512 = 1,024 connections, so with traditional HTTP/HTTPS we can only serve 170 clients at a time if each uses 6 connections. With SPDY, each client only ever uses a single connection, meaning we can serve 1,024 clients with the same configuration. With fewer connections per client, we can deliver all of the requested content to any given client whilst consuming less CPU and memory on the server, improving overall efficiency. At this point, the bottleneck on your server is less likely to be nginx and more likely to be the back end or IO.

Widespread Support

Support for SPDY is pretty widespread. If you're using the latest versions of Chrome, Firefox or Internet Explorer then you will have SPDY 3.1 support out of the box. You can find more details on the Wikipedia page. Even if your browser isn't SPDY capable, it doesn't really present a problem. The server will just respond using HTTP/S as before, so there's little reason not to upgrade to SPDY. Because SPDY is a kind of wrapper around HTTPS, it doesn't require any changes to your application or code. Bonus!
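The graceful fallback works because the protocol is chosen during the TLS handshake via NPN: the server advertises the protocols it speaks and the client picks one it also supports. The sketch below is a simplified model of that choice; negotiate is a hypothetical helper, the protocol lists are examples rather than exactly what nginx advertises, and the edge case where an NPN-capable client shares no protocol with the server is glossed over.

```shell
# negotiate (hypothetical helper): simplified model of NPN protocol selection.
#   $1  protocols the server advertises, space-separated, in preference order
#   $2  protocols the client supports, space-separated ("" = no NPN support)
negotiate() {
  local s c
  # Walk the server's list in order and take the first protocol the client
  # also supports (server preference, as OpenSSL's SSL_select_next_proto does).
  for s in $1; do
    for c in $2; do
      if [ "$s" = "$c" ]; then
        echo "$s"
        return 0
      fi
    done
  done
  # Nothing in common, e.g. a browser without NPN/SPDY support: plain HTTPS.
  echo "http/1.1"
}

# Usage:
#   negotiate 'spdy/3.1 http/1.1' 'spdy/3.1 http/1.1'   # SPDY-capable browser
#   negotiate 'spdy/3.1 http/1.1' ''                    # older browser
```

The first call picks spdy/3.1; the second falls back to http/1.1, which is why older browsers keep working unchanged.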